I am a computer vision engineer at Apple, where I work on 3D computer vision and machine learning. Before that, I spent about a year at DJI working on 3D vision. I did my PhD at University College London, where I was advised by Lourdes Agapito and Chris Russell. Earlier, I received my BSc (2009) and MSc (2012) from Northwestern Polytechnical University, under the supervision of Prof. Yanning Zhang and Prof. Tao Yang.
Email / CV / Google Scholar / GitHub

I'm interested in computer vision, machine learning, optimization, deep learning and geometric vision. My research focuses on inferring the dynamic 3D structure of scenes from images or monocular video. I have also worked on multiple-object detection and tracking, and on synthetic aperture imaging.

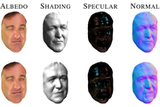

Qi Liu-Yin*, Rui Yu*, Lourdes Agapito, Andrew Fitzgibbon, Chris Russell
Submitted to a Special Issue of the International Journal of Computer Vision (IJCV)
We demonstrate the use of shape-from-shading (SfS) to improve both the quality and the robustness of 3D reconstruction of dynamic objects captured by a single camera.

Qi Liu-Yin, Rui Yu, Lourdes Agapito, Andrew Fitzgibbon, Chris Russell
British Machine Vision Conference (BMVC), 2016 (Best Poster)
paper / website / code / data
This paper is subsumed by our IJCV submission.

Rui Yu, Chris Russell, Lourdes Agapito
British Machine Vision Conference (BMVC), 2016 (Oral)
paper / supplementary material / longer version
We propose a novel Linear Program (LP) based formulation for solving jigsaw puzzles. In contrast to existing greedy methods, our LP solver exploits all the pairwise matches simultaneously and computes the position of each piece/component globally.

Rui Yu, Chris Russell, Neill D. F. Campbell, Lourdes Agapito
International Conference on Computer Vision (ICCV), 2015
paper / website / code / longer version
In this paper we tackle the problem of capturing the dense, detailed 3D geometry of generic, complex non-rigid meshes using a single commodity RGB-only video camera and a direct approach.

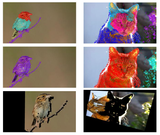

Chris Russell*, Rui Yu*, Lourdes Agapito
European Conference on Computer Vision (ECCV), 2014 (Oral)
paper / website / code / longer version
In this paper we propose an unsupervised approach to the challenging problem of simultaneously segmenting the scene into its constituent objects and reconstructing a 3D model of the scene.

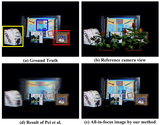

Tao Yang, Yanning Zhang, Jingyi Yu, Jing Li, Xiaomin Tong, Rui Yu
European Conference on Computer Vision (ECCV), 2014
paper
In this paper, we present a novel depth-free, all-in-focus synthetic aperture imaging (SAI) technique based on light field visibility analysis.

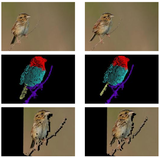

Tao Yang, Yanning Zhang, Rui Yu, Xiaoqiang Zhang, Ting Chen, Lingyan Ran, Zhengxi Song, Wenguang Ma
Image and Vision Computing, 2014
paper
Automatically focusing on and seeing occluded moving objects in cluttered, complex scenes is a significant challenge for many computer vision applications. In this paper, we present a novel synthetic aperture imaging approach to solve this problem.

Tao Yang, Yanning Zhang, Rui Yu, Ting Chen
International Journal of Advanced Robotic Systems, 2013
paper
Automatically focusing on and seeing occluded moving objects in cluttered, complex scenes is a significant challenge for many computer vision applications. In this paper, we present a novel synthetic aperture imaging approach to solve this problem.

Tao Yang, Yanning Zhang, Xiaomin Tong, Xiaoqiang Zhang, Rui Yu
IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2011
paper
Robust detection and tracking of multiple people in cluttered, crowded scenes with severe occlusion is a significant challenge for many computer vision applications. In this paper, we present a novel hybrid synthetic aperture imaging model to solve this problem.

In this thesis, we present two pieces of work on reconstructing dense generic shapes from monocular sequences. In the first, we propose an unsupervised approach to the challenging problem of simultaneously segmenting the scene into its constituent objects and reconstructing a 3D model of the scene. In the second, we propose a direct approach for capturing the dense, detailed 3D geometry of generic, complex non-rigid meshes using a single camera.
