Kaleidoscope investigates dynamic models of interaction. Taking as its point of departure the conceptualisation of the interface as a site for learning, Kaleidoscope aims to create an intelligent interface where the parameters of interaction are set in an evolving relationship to the parameters of the digital environment.

In Periscope, I see what you hear and Spawn, the interface is seen as an environment for action, a medium through which new actions are learnt and incorporated. Kaleidoscope probes the idea of the interface as an evolving environment for learning. What happens when the interface learns from the actions of the user? What are the parameters of affect as the user adapts to the system and the system adapts to the user?

Evolving ecologies, complex systems, iterative systems, non-linear dynamic systems, phase space

The core theme in Kaleidoscope is the establishment of a dynamic relationship between user and environment: an evolving system in which both intelligences learn to respond to one another.

Kaleidoscope is primarily concerned with making an intelligent interface in which the mapping between the parameters of interaction and the parameters of the digital environment changes over time. This dynamic mapping allows a flow between the actions of the user and the reactions of the digital environment.

In the project I see what you hear (a CAVE experiment), it was observed that users would invent new actions that exceeded or extended the designed interactions of the system. Users learnt to create short or sharp sounds so as to clear the space (drawing new splines outside the display environment of the CAVE) and allow for a fresh start. As well as learning to control the parameters of pitch, volume and movement, users found inventive ways of manipulating the system so as to interact in a manner that was meaningful to them. These new or unpredicted actions were understood as actions on a par with the intended interactions of the system.

Kaleidoscope seeks to explore the formation of new interactions. By releasing the digital from a preconceived model of intuitive interaction (mapping), the aim is to explore whether and how the user can build an understanding of her effect on the system.

The programme seeks to guess repeated gestures (interactions with the physical interface) and simply map these to digital events.

This could be done by building a table (array) that caches the gestures and categorises them. As the user repeats actions, the ‘solidity’ of the gesture is reinforced.
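A minimal sketch of such a table, assuming each gesture has already been quantised into a hashable signature (for example a tuple of labels such as `("fast", "jerky")`). The class name `GestureTable`, the `threshold` parameter and the `event_N` naming are hypothetical. Here ‘solidity’ is read as the share of all observed gestures that a signature accounts for, which is only one possible interpretation.

```python
from collections import defaultdict


class GestureTable:
    """Hypothetical gesture cache: counts how often each gesture signature recurs."""

    def __init__(self, threshold=3):
        self.counts = defaultdict(int)  # gesture signature -> number of repetitions
        self.events = {}                # gesture signature -> assigned digital event
        self.threshold = threshold      # repetitions before a gesture counts as 'solid'

    def observe(self, signature):
        """Record one occurrence of a gesture and return its mapped event, if any."""
        self.counts[signature] += 1
        if signature not in self.events and self.counts[signature] >= self.threshold:
            # The gesture has become 'solid': bind it to the next free event id.
            self.events[signature] = f"event_{len(self.events)}"
        return self.events.get(signature)

    def solidity(self, signature):
        """Share of all observed gestures accounted for by this signature (0..1)."""
        total = sum(self.counts.values())
        return self.counts.get(signature, 0) / total if total else 0.0


table = GestureTable(threshold=2)
print(table.observe(("fast", "jerky")))  # None: seen only once so far
print(table.observe(("fast", "jerky")))  # event_0: now solid enough to be mapped
```

Counting repetitions is the simplest form of reinforcement; a decay term could be added so that gestures the user stops performing gradually lose their solidity.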

Question:

Could we use reinforcement learning as a paradigm?
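One possible reading, offered only as a sketch: treat each recognised gesture as a context and the choice of digital event as an action. This reduces the problem to a tabular contextual bandit, a simplified form of reinforcement learning without state transitions. The reward signal is a hypothetical assumption here, for instance +1 when the user repeats the gesture shortly after seeing the response, read as a sign that the mapping is meaningful to them. `GestureBandit`, `choose` and `update` are illustrative names, not part of any existing codebase.

```python
import random
from collections import defaultdict


class GestureBandit:
    """Tabular contextual bandit: for each gesture, learn which event to trigger."""

    def __init__(self, events, alpha=0.2, epsilon=0.1, seed=None):
        self.events = list(events)
        self.alpha = alpha              # learning rate
        self.epsilon = epsilon          # probability of trying a random event
        self.q = defaultdict(float)     # (gesture, event) -> estimated value
        self.rng = random.Random(seed)

    def choose(self, gesture):
        """Epsilon-greedy choice of digital event for a gesture."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.events)
        return max(self.events, key=lambda e: self.q[(gesture, e)])

    def update(self, gesture, event, reward):
        """Incremental value update: Q <- Q + alpha * (reward - Q)."""
        key = (gesture, event)
        self.q[key] += self.alpha * (reward - self.q[key])


bandit = GestureBandit(["bounce", "grow", "fade"], seed=0)
gesture = ("fast", "jerky")
event = bandit.choose(gesture)
user_repeated = True  # hypothetical reward signal observed after the response
bandit.update(gesture, event, 1.0 if user_repeated else 0.0)
```

The exploration rate `epsilon` is what lets the system keep offering unexpected responses, which may matter if the aim is for the user to discover new interactions rather than settle on a fixed mapping.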

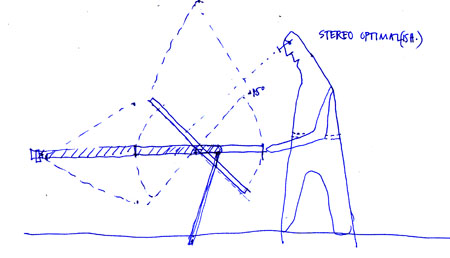

The prototype is conceived as a very simple physical interface to be manipulated by hand only. Conceived as a movable screen, the interface merges interaction and display into one, thereby creating a strong link between doing and seeing. The screen is tracked by a camera (employing code from Spawn), which sees the screen being moved around a central pivot. The different ways of manipulating the screen (slow, fast, jerky, smooth, i.e. changes to the set parameters of velocity, time and position) allow the user to interact in different ways. Learning from the Periscope installation, it is expected that users will engage with the interface in very different ways despite it having only a single parameter for movement around the central pivot.

Parameters of interaction:

Tilt over time creates the parameters of smoothness (jerky/smooth), speed (slow/still/fast), degree (small/large movement) and position (where within the totality of the pan the interaction takes place).
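A rough sketch of how these four parameters might be derived from the camera-tracked tilt, assuming the tracker delivers time-stamped tilt angles in degrees (the actual output format of the Spawn tracking code is not described here). The pan range `pan_min`/`pan_max`, the use of mean absolute angular acceleration as a proxy for jerkiness, and the thresholds in `speed_label` are all hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class TiltFeatures:
    speed: float      # mean absolute angular velocity (deg/s)
    degree: float     # angular extent of the movement (deg)
    position: float   # mean tilt within the pan range: 0 = one end, 1 = the other
    jerkiness: float  # mean absolute angular acceleration (deg/s^2); low = smooth


def tilt_features(samples, pan_min=-45.0, pan_max=45.0):
    """samples: list of (time_s, angle_deg) pairs in time order, at least three."""
    if len(samples) < 3:
        raise ValueError("need at least three samples")
    times = [t for t, _ in samples]
    angles = [a for _, a in samples]
    # Angular velocity between consecutive samples.
    vel = [(a1 - a0) / (t1 - t0) for (t0, a0), (t1, a1) in zip(samples, samples[1:])]
    # Each velocity estimate belongs to the midpoint of its time interval.
    mid = [(t0 + t1) / 2 for t0, t1 in zip(times, times[1:])]
    # Angular acceleration between consecutive velocity estimates.
    acc = [(v1 - v0) / (m1 - m0) for v0, v1, m0, m1 in zip(vel, vel[1:], mid, mid[1:])]
    mean_angle = sum(angles) / len(angles)
    return TiltFeatures(
        speed=sum(abs(v) for v in vel) / len(vel),
        degree=max(angles) - min(angles),
        position=(mean_angle - pan_min) / (pan_max - pan_min),
        jerkiness=sum(abs(a) for a in acc) / len(acc),
    )


def speed_label(speed, still=1.0, fast=30.0):
    """Map a continuous speed onto the slow/still/fast categories."""
    if speed < still:
        return "still"
    return "fast" if speed >= fast else "slow"


features = tilt_features([(0.0, 0.0), (0.1, 2.0), (0.2, 5.0), (0.3, 9.0)])
print(features, speed_label(features.speed))
```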

The idea for the visualisation is a set of virtual objects affected by the way the interaction is performed (learning from Giver of Names). As the user manipulates the screen around the central pivot point, the virtual objects tumble and fall as they are affected by a mutual (mixed) sense of gravity. The aim for the visualisation is that it should be dynamic but simple enough to allow us to understand the learning of the interface. A second aim is to create correlations between the physical and the digital environment through the perceived dynamics of the environment (e.g. through the force of gravity). This is a simple Mixed Reality construct. In the first stage, this sense of gravity determines the behaviour of the entire system.

This gives the following output parameters. Self-movement creates the parameters of falling (slide/roll), bounce (high/low), friction (high/low) and speed (slow/still/fast).
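A minimal sketch of the first-stage gravity model, reduced to one dimension along the screen since the screen only rotates around a single pivot. It assumes the tilt angle is the only input. `Body`, `step`, the linear damping used in place of true Coulomb friction, and the restitution value standing in for bounce are illustrative assumptions, not a description of an existing implementation; rolling versus sliding is not modelled.

```python
import math
from dataclasses import dataclass

G = 9.81  # m/s^2, assumed real-world gravity shared by physical and virtual space


@dataclass
class Body:
    x: float               # position along the screen (m), from 0 to the screen length
    v: float = 0.0         # velocity along the screen (m/s)
    friction: float = 0.1  # hypothetical damping coefficient; higher = slower sliding
    bounce: float = 0.5    # restitution at the edges: 0 = dead stop, 1 = elastic


def step(body, tilt_deg, dt, length=1.0):
    """Advance one body by dt seconds on a screen tilted by tilt_deg around its pivot."""
    theta = math.radians(tilt_deg)
    # The component of gravity along the tilted screen pulls the body 'downhill'.
    a = G * math.sin(theta)
    # Linear, velocity-proportional damping stands in for friction.
    a -= body.friction * body.v
    body.v += a * dt       # semi-implicit Euler: update velocity first...
    body.x += body.v * dt  # ...then position using the new velocity
    # Bounce off the edges of the screen, losing energy according to 'bounce'.
    if body.x < 0.0:
        body.x, body.v = 0.0, -body.v * body.bounce
    elif body.x > length:
        body.x, body.v = length, -body.v * body.bounce
    return body


ball = Body(x=0.5, bounce=0.8)
for _ in range(100):  # one second of simulation at 100 Hz with a 20 degree tilt
    step(ball, tilt_deg=20.0, dt=0.01)
```

High and low bounce or friction then correspond directly to the `bounce` and `friction` fields, which could themselves become parameters the interface adjusts as it learns.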

Further parameters could be: Growth (big/small object), transparency, trace, colour.

In the second stage, the objects might form connections (springs) to each other (here learning from the Biotica project by Richard Brown, as well as from Karl Sims and the motion of the double pendulum). These connections might be rule-based, allowing them to form and dissipate over the course of the experiment. Learning from Biotica’s bions, new dynamic variables could be: Charge, Mass, Velocity, Size, Neural value (?), Colour, Reproduction, Death, Form spring, Form neural connection, Form skin.
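The second-stage springs could be sketched with Hooke's law plus a simple distance rule for forming and dissipating connections. This is loosely based on the description above, not on Biotica's or Karl Sims's actual code; the names `Node`, `spring_force`, `update_springs`, `step_springs` and the thresholds `form_dist`/`break_dist` are hypothetical, and no damping is applied, so connected pairs will oscillate indefinitely.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    mass: float = 1.0


def spring_force(a, b, rest, k):
    """Hooke's law: force on a from a spring to b, proportional to the stretch."""
    dx, dy = b.x - a.x, b.y - a.y
    d = math.hypot(dx, dy)
    if d == 0.0:
        return 0.0, 0.0
    f = k * (d - rest)  # positive when stretched, which pulls a towards b
    return f * dx / d, f * dy / d


def update_springs(nodes, springs, form_dist=1.0, break_dist=3.0):
    """Rule-based connections: form when close, dissipate when overstretched.

    springs is a set of (i, j) index pairs with i < j.
    """
    for i in range(len(nodes)):
        for j in range(i + 1, len(nodes)):
            d = math.hypot(nodes[j].x - nodes[i].x, nodes[j].y - nodes[i].y)
            if d < form_dist:
                springs.add((i, j))
            elif d > break_dist:
                springs.discard((i, j))
    return springs


def step_springs(nodes, springs, dt, rest=1.0, k=2.0):
    """Apply spring forces for one time step (semi-implicit Euler)."""
    forces = [[0.0, 0.0] for _ in nodes]
    for i, j in springs:
        fx, fy = spring_force(nodes[i], nodes[j], rest, k)
        forces[i][0] += fx
        forces[i][1] += fy
        forces[j][0] -= fx  # equal and opposite force on the other node
        forces[j][1] -= fy
    for n, (fx, fy) in zip(nodes, forces):
        n.vx += fx / n.mass * dt
        n.vy += fy / n.mass * dt
        n.x += n.vx * dt
        n.y += n.vy * dt
```

Variables such as Mass already enter through `Node.mass`; others from the list (Charge, Size) would need their own force or rule terms.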

I would like to test whether we can use passive stereo as a display. This would work well for seeing the bounce of the digital objects.

The idea is to think of the system as the entirety of the experience, including the digital environment, the user and the interface itself.

Both input and output parameters are dynamic properties of the system rather than formal qualities of the environment.

Sand box: top-down projection (or bottom-up). The problem here is that the projection will be stable (good for stereo), which means that the interactions have to affect the setting of the virtual camera (this is interesting in itself, but the delay might be a bit weird).