What is the Cloud?

Extract from "Software Architecture and Clouds"

Until recently, operating systems managed the allocation of physical resources, such as CPU time, main memory, disk space and network bandwidth, to applications. Virtualisation infrastructures, such as Xen and VMware, are changing this by introducing a layer of abstraction known as a hypervisor. A hypervisor runs on top of physical hardware and allocates resources to isolated execution environments known as virtual machines, each of which runs its own virtualised operating system. Hypervisors manage the execution of these operating systems, booting, suspending or shutting them down as required. Some hypervisors even support replication and migration of virtual machines without stopping the virtualised operating system.
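The lifecycle control described above can be pictured as a small state machine. The following is a minimal sketch only: the class and operation names are hypothetical, the `resume` operation is an assumption added so that a suspended machine can continue, and no real hypervisor API (Xen, VMware or otherwise) is being modelled.

```python
from enum import Enum


class VMState(Enum):
    STOPPED = "stopped"
    RUNNING = "running"
    SUSPENDED = "suspended"


# Allowed lifecycle operations: name -> (state required, state afterwards).
# "resume" is an assumption; the text names only boot, suspend and shut down.
TRANSITIONS = {
    "boot": (VMState.STOPPED, VMState.RUNNING),
    "suspend": (VMState.RUNNING, VMState.SUSPENDED),
    "resume": (VMState.SUSPENDED, VMState.RUNNING),
    "shutdown": (VMState.RUNNING, VMState.STOPPED),
}


class Hypervisor:
    """Toy hypervisor that tracks the state of the virtual machines it hosts."""

    def __init__(self):
        self.vms = {}

    def define(self, name):
        # A newly defined virtual machine starts out stopped.
        self.vms[name] = VMState.STOPPED

    def apply(self, name, operation):
        required, result = TRANSITIONS[operation]
        if self.vms[name] is not required:
            raise ValueError(
                f"cannot {operation} {name} while {self.vms[name].value}"
            )
        self.vms[name] = result
        return result
```

The point of the sketch is that the guest operating system never drives these transitions itself; they are imposed from outside by the layer that owns the physical resources.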

It turns out that the separation between resource provision and operating systems introduced by virtualisation technologies is a key enabler for cloud computing. Compute clouds provide the ability to lease computational resources at short notice, on either a subscription or a pay-per-use basis, and without the need for any capital expenditure on hardware. A further advantage is that the unit cost of operating a server in a large server farm is lower than in small data centres. Examples of compute clouds include Amazon’s Elastic Compute Cloud (EC2) and IBM’s Blue Cloud. Organisations wishing to use computational resources provided by these clouds supply virtual machine images that are then executed by the hypervisors running in the cloud, which allocate physical resources to virtualised operating systems and control their execution.

In this paper we review the implications of the emergence of virtualisation and compute clouds for software engineers in general and software architects in particular. We find that software architectures need to be described differently if they are to be deployed into a cloud. The reason is that scalability, availability, reliability, ease of deployment and total cost of ownership are quality attributes that a software architecture must achieve, and these depend critically on how hardware resources are provided. Virtualisation in general and compute clouds in particular provide a wealth of new primitives that software architects can exploit to improve the way their architectures deliver these quality attributes.

The principal contribution of this paper is a discussion of architecture definition for distributed applications that are to be deployed on compute clouds. The key insight is that the architecture description needs to be reified at run-time so that it can be used by the cloud computing infrastructure in order to implement, monitor and preserve architectural quality goals. This requires several advances over the state of the art in software architecture. Architectural constraints need to be made explicit so that the cloud infrastructure can obey these when it allocates, activates, replicates, migrates and deactivates virtual machines that host components of the architecture. The architecture also needs to describe how and when it responds to load variations and faults; we propose the concept of elasticity rules for architecture definitions so that the cloud computing infrastructure can replicate components and provide additional resources as demand grows or components become unavailable. We evaluate these primitives experimentally with a distributed computational chemistry application.
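To make the idea of an elasticity rule more concrete, here is a minimal sketch of what such a rule might look like once reified at run-time. It is not the notation used in the paper: the field names, the load thresholds and the one-replica-at-a-time policy are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ElasticityRule:
    """Hypothetical rule attached to one component of the architecture."""

    component: str
    scale_up_above: float    # average load per replica that triggers replication
    scale_down_below: float  # average load per replica that allows removal
    min_replicas: int = 1
    max_replicas: int = 10


def desired_replicas(rule, running, avg_load):
    """Return how many replicas the infrastructure should aim for."""
    # Fault handling: if replicas have become unavailable, restore the minimum.
    if running < rule.min_replicas:
        return rule.min_replicas
    # Demand is growing: replicate the component, within the allowed bound.
    if avg_load > rule.scale_up_above and running < rule.max_replicas:
        return running + 1
    # Demand has dropped: release one replica, but never go below the minimum.
    if avg_load < rule.scale_down_below and running > rule.min_replicas:
        return running - 1
    return running
```

For example, with a rule such as `ElasticityRule("worker", 0.8, 0.2, min_replicas=2, max_replicas=5)`, two running replicas at an average load of 0.9 yield a target of three, while a single surviving replica yields a target of two regardless of load. Because the rule is plain data, the infrastructure can evaluate it without any knowledge of the application itself.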

RESERVOIR aims to satisfy the vision of service-oriented computing by distinguishing and addressing the needs of Service Providers, who understand the operation of particular businesses and offer suitable Service applications, and Infrastructure Providers, who lease computational resources in the form of a cloud computing infrastructure. This infrastructure provides the mechanisms to deploy and manage self-contained services, each consisting of a set of software components, on behalf of a service provider.

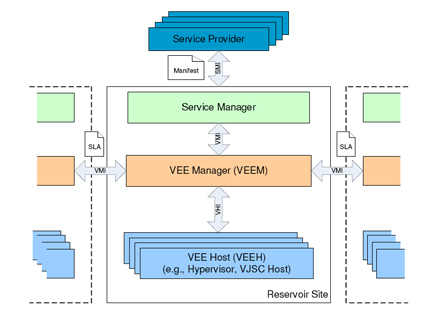

The architecture is shown in the figure above. A partial implementation of the architecture (in particular, the VEEM layer), which we have used in our experimental evaluation described below, is publicly available from OpenNebula.org. It has also been included in the Ubuntu Linux distribution since release 9.04.

RESERVOIR relies on generally available virtualisation products, such as Xen, VMware or KVM. The lowest layer of the RESERVOIR architecture is the Virtual Execution Environment Host (VEEH). It provides plugins for different hypervisors and enables the upper layers of the architecture to interact with heterogeneous virtualisation products. The layer above is the Virtual Execution Environment Manager (VEEM), which implements the key abstractions needed for cloud computing. A VEEM controls the activation, migration, replication and de-activation of virtualised operating systems, and typically controls multiple VEEHs within one site. The key differentiator from other cloud computing infrastructures is RESERVOIR’s ability to federate across different sites, which might be running different virtualisation products. This is achieved by cross-site interactions between multiple VEEMs operating on behalf of different cloud computing providers. It supports replication of virtual machines to other locations, for example for business continuity purposes. The highest level of abstraction in the RESERVOIR architecture is the Service Manager. While the VEEM allocates services according to a given placement policy, it is the Service Manager that interfaces with the Service Provider and ensures that requirements (e.g. the resource allocation requested) are correctly enforced. The Service Manager also performs other service management tasks, such as accounting and billing of service usage.
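The division of responsibilities between the three layers can be sketched in a few lines of Python. This is an illustration only and does not reflect the actual RESERVOIR or OpenNebula interfaces: every class, method and parameter name below is hypothetical, capacity is reduced to a simple slot count, and federation across sites is left out.

```python
from dataclasses import dataclass, field


@dataclass
class VEEH:
    """Lowest layer: one host, hiding which hypervisor it runs."""

    name: str
    hypervisor: str   # e.g. "xen" or "kvm"; selects the plugin in a real system
    capacity: int     # number of VEEs this host can run (simplifying assumption)
    running: list = field(default_factory=list)

    def free(self):
        return self.capacity - len(self.running)


def least_loaded(hosts):
    """Example placement policy: pick the host with the most free slots."""
    return max(hosts, key=lambda h: h.free())


class VEEM:
    """Middle layer: controls several VEEHs within one site."""

    def __init__(self, hosts, placement_policy):
        self.hosts = hosts
        self.placement_policy = placement_policy

    def activate(self, vee_name):
        candidates = [h for h in self.hosts if h.free() > 0]
        if not candidates:
            raise RuntimeError("no capacity left on this site")
        host = self.placement_policy(candidates)
        host.running.append(vee_name)
        return host


class ServiceManager:
    """Top layer: talks to the Service Provider and keeps usage records."""

    def __init__(self, veem):
        self.veem = veem
        self.usage = {}   # provider -> number of VEEs activated, for billing

    def deploy(self, provider, service, instances):
        placed = [
            self.veem.activate(f"{service}-{i}").name for i in range(instances)
        ]
        self.usage[provider] = self.usage.get(provider, 0) + instances
        return placed
```

Note that the Service Manager never touches a host directly, and the VEEM never needs to know which hypervisor a given VEEH runs. The placement policy is passed in as a plain function so that it can be swapped without changing either layer.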
