
zxhproj v 2.2

zxhproj


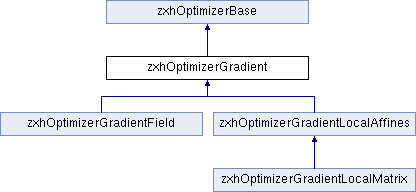

Optimizer that searches for the optimal value of the metric over the transformation parameters by following the gradient direction.

#include <zxhOptimizerGradient.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | `zxhOptimizerGradient (void)` | Constructor. |
| | `~zxhOptimizerGradient (void)` | Destructor. |
| `virtual void` | `SetTransform (zxhTransformBase *p)` | Sets the transform and creates (`new`) a gradient object whose type matches the transformation type. |
| `virtual void` | `SetGradientClassUsingObjectType (zxhTransformBase *pClassObject)` | Sets the gradient type. |
| `virtual std::string` | `GetPrintString ()` | |
| `virtual bool` | `SetOptimizerFromStream (std::ifstream &ifs)` | Sets the optimizer from a stream. |
| `virtual zxhOptimizerBase *` | `Clone (zxhOptimizerBase *&pRet)` | |
| `virtual void` | `SetRegularRate (float f)` | Sets the regular rate (gradient optimizer property). |
| `virtual float` | `GetRegularRate (void)` | Gets the regular rate. |
| `virtual void` | `SetSearchLength (float f)` | Sets the search length. |
| `virtual float` | `GetSearchLength (void)` | Gets the search length. |
| `virtual void` | `SetRegularMinStepLength (float f)` | Sets the regular minimum step length. |
| `virtual float` | `GetRegularMinStepLength (void)` | Gets the regular minimum step length. |
| `virtual void` | `SetRegriddingPortion (float f)` | Sets the regridding portion. |
| `virtual float` | `GetRegriddingPortion (void)` | Gets the regridding portion. |
| `virtual void` | `Run ()` | Runs the registration to search for the optimum. |
| `virtual float` | `AdvanceAlongGradient (zxhTransformBase *pGradientInfCur)` | Returns the number of steps advanced (fractional steps may be supported in future). |
| `virtual void` | `SetSearchOptimalAlongGradient (int i)` | |
| `virtual int` | `GetSearchOptimalAlongGradient ()` | |
| `virtual void` | `SetMaxLineSearchStep (int n)` | |
| `virtual int` | `GetMaxLineSearchStep ()` | |
| `virtual bool` | `AdjustParameters (bool forceconcatenation=false)` | |
| `virtual bool` | `ConcatenateTransforms ()` | Fluid regridding (or forward composition in localmatrix). |
| `virtual bool` | `ConcatenateFinalTransforms ()` | |
| `virtual bool` | `ConcatenateTransformsNUpdate ()` | |

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| `virtual float` | `ComputeAndUpdateConjugateDirection (zxhTransformBase *gk_1, zxhTransformBase *gk, zxhTransformBase *dk_1, zxhTransformBase *dk)` | Arguments are g(k-1), g(k), d(k-1), d(k), as defined in TMI, vol. 16, no. 12, pp. 2879. |
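The declaration cites TMI vol. 16, no. 12 for the conjugate-direction update but does not say which formula is used. The following is a minimal sketch assuming the Polak-Ribière (PR+) variant applied to plain parameter vectors; `Params`, `Dot`, and `UpdateConjugateDirection` are hypothetical stand-ins, not zxhproj code, and returning beta is only an assumption about what the `float` return value means.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the parameter vector held by a zxhTransformBase.
using Params = std::vector<double>;

static double Dot(const Params& a, const Params& b) {
    assert(a.size() == b.size());
    double s = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) s += a[i] * b[i];
    return s;
}

// Assumed Polak-Ribiere (PR+) update for gradient ascent:
//   beta = max(0, g_k . (g_k - g_{k-1}) / (g_{k-1} . g_{k-1}))
//   d_k  = g_k + beta * d_{k-1}
// Falls back to plain gradient ascent (beta = 0) when g_{k-1} is zero.
// Returns beta (hypothetical meaning of the float return value).
double UpdateConjugateDirection(const Params& gPrev, const Params& g,
                                const Params& dPrev, Params& d) {
    assert(dPrev.size() == g.size());
    const double denom = Dot(gPrev, gPrev);
    double beta = 0.0;
    if (denom > 0.0) {
        beta = (Dot(g, g) - Dot(g, gPrev)) / denom;
        if (beta < 0.0) beta = 0.0;  // PR+ restart: never go against the new gradient
    }
    d.resize(g.size());
    for (std::size_t i = 0; i < g.size(); ++i) d[i] = g[i] + beta * dPrev[i];
    return beta;
}
```

PR+ is chosen here only because it restarts automatically when successive gradients stop being conjugate; Fletcher-Reeves would be an equally valid substitution in this sketch.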

Protected Attributes

| Type | Member |
|---|---|
| `zxhGradientBase *` | `m_pGradient` |
| `float` | `m_fRegularRate` |
| `float` | `m_fSearchLength` |
| `float` | `m_fRegularMinStepLength` |
| `float` | `m_fRegriddingPortion` |
| `int` | `m_iSearchOptimalAlongGradient` |
| `int` | `m_nMaxLineSearchStep` |


`bool zxhOptimizerGradient::AdjustParameters (bool forceconcatenation = false)` [virtual]

Adjusts the transform, metric, gradient, differential step, ..., and performs concatenation:

1) normal FFDs: one2one, or ZXHTO with a Jacobian test;

2) directional FFDs: sets m_pConcatenatedTransformsByRegridding.

Returns whether regridding was performed.

Reimplemented in zxhOptimizerGradientLocalAffines.
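AdjustParameters mentions a Jacobian test when deciding whether to regrid. A common check in fluid-registration regridding is to compute the Jacobian determinant of x → x + u(x) over the grid and regrid once its minimum drops below a threshold. The following is a minimal 2-D sketch of such a test, assuming a unit-spaced displacement field and forward differences; `DisplacementField2D`, `MinJacobian`, `NeedsRegridding`, and the threshold are hypothetical and do not describe how zxhproj implements the ZXHTO test.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical 2-D displacement field u = (ux, uy) on a unit-spaced grid,
// stored row-major with nx columns and ny rows.
struct DisplacementField2D {
    std::size_t nx, ny;
    std::vector<double> ux, uy;
};

// Minimum Jacobian determinant of x -> x + u(x), using forward differences.
double MinJacobian(const DisplacementField2D& f) {
    assert(f.nx >= 2 && f.ny >= 2);
    assert(f.ux.size() == f.nx * f.ny && f.uy.size() == f.nx * f.ny);
    double minDet = std::numeric_limits<double>::max();
    for (std::size_t j = 0; j + 1 < f.ny; ++j) {
        for (std::size_t i = 0; i + 1 < f.nx; ++i) {
            const std::size_t k = j * f.nx + i;
            const double duxDx = f.ux[k + 1] - f.ux[k];
            const double duxDy = f.ux[k + f.nx] - f.ux[k];
            const double duyDx = f.uy[k + 1] - f.uy[k];
            const double duyDy = f.uy[k + f.nx] - f.uy[k];
            const double det = (1.0 + duxDx) * (1.0 + duyDy) - duxDy * duyDx;
            if (det < minDet) minDet = det;
        }
    }
    return minDet;
}

// Regrid (concatenate the current transform and restart) when the
// minimum Jacobian falls below the threshold.
bool NeedsRegridding(const DisplacementField2D& f, double jacobianThreshold) {
    return MinJacobian(f) < jacobianThreshold;
}
```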

`zxhOptimizerBase * zxhOptimizerGradient::Clone (zxhOptimizerBase *& pRet)` [virtual]

Reimplemented from zxhOptimizerBase.

Reimplemented in zxhOptimizerGradientField, zxhOptimizerGradientLocalAffines, and zxhOptimizerGradientLocalMatrix.
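The `Clone(zxhOptimizerBase *&pRet)` signature suggests the reference-to-pointer cloning idiom, where the caller passes either a null pointer (to be allocated) or an existing object (to be overwritten). This page does not document that behavior, so the sketch below is an assumption; `OptimizerBase`, `OptimizerGradient`, and the copied members are hypothetical simplifications, not the zxhproj classes.

```cpp
#include <cassert>

// Hypothetical minimal hierarchy mirroring the Clone(Base*&) shape.
class OptimizerBase {
public:
    virtual ~OptimizerBase() {}
    // Copies *this into pRet, allocating it when pRet is null; returns pRet.
    virtual OptimizerBase* Clone(OptimizerBase*& pRet) = 0;
};

class OptimizerGradient : public OptimizerBase {
public:
    float m_fRegularRate = 0.5f;
    float m_fSearchLength = 1.0f;

    OptimizerBase* Clone(OptimizerBase*& pRet) override {
        if (pRet == nullptr) pRet = new OptimizerGradient;  // caller owns the result
        OptimizerGradient* p = dynamic_cast<OptimizerGradient*>(pRet);
        assert(p != nullptr && "pRet must point to an OptimizerGradient");
        p->m_fRegularRate = m_fRegularRate;
        p->m_fSearchLength = m_fSearchLength;
        return pRet;
    }
};
```

Because each subclass overrides `Clone`, a caller holding only a base pointer still gets a copy of the most-derived type, which is consistent with the subclasses listed above reimplementing it.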

`std::string zxhOptimizerGradient::GetPrintString ()` [virtual]

`virtual int zxhOptimizerGradient::GetSearchOptimalAlongGradient ()` [inline, virtual]

0: advance only one step (default).

1: advance one step, then search forward until the maximum is reached, without setting m_bStop.

2: search for the optimum along the gradient, and set m_bStop if the current position is the optimum.
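The three search modes can be illustrated on a one-dimensional metric sampled along the search direction. This is a minimal sketch, assuming that a "step" is a fixed increment along the normalised gradient and that m_nMaxLineSearchStep caps the forward search; `AdvanceAlongDirection` and `LineSearchResult` are hypothetical, and `stop` only stands in for m_bStop.

```cpp
#include <cassert>
#include <functional>

// Hypothetical result of one line search; `stop` plays the role of m_bStop.
struct LineSearchResult {
    float steps;  // number of steps advanced (float, like AdvanceAlongGradient)
    bool stop;
};

// metric(t) is the metric value at position t along the search direction.
LineSearchResult AdvanceAlongDirection(const std::function<double(double)>& metric,
                                       double& t, double step, int mode,
                                       int maxLineSearchStep) {
    assert(step > 0.0 && maxLineSearchStep >= 1);
    LineSearchResult r{0.0f, false};
    if (mode == 2 && metric(t + step) <= metric(t)) {
        r.stop = true;  // mode 2: the current position is already the optimum
        return r;
    }
    t += step;          // every mode takes the first step
    int n = 1;
    if (mode != 0) {
        // modes 1 and 2: keep stepping forward while the metric increases
        while (n < maxLineSearchStep && metric(t + step) > metric(t)) {
            t += step;
            ++n;
        }
    }
    r.steps = static_cast<float>(n);
    return r;
}
```

With `metric(t) = -(t - 3)^2`, a step of 1, and a start at 0, mode 0 stops at t = 1, mode 1 walks forward to the maximum at t = 3, and a subsequent mode-2 call at t = 3 sets `stop` without moving.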

`void zxhOptimizerGradient::Run ()` [virtual]

Runs the registration to search for the optimum:

1. Check whether to stop if the metric has not increased for a number of steps.

2. Compute the gradient.

3. Regular gradient ascent.

5. Advance along the gradient.

4. Normalise the gradient for heart registration.

Implements zxhOptimizerBase.

Reimplemented in zxhOptimizerGradientField and zxhOptimizerGradientLocalAffines.
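The documented steps of Run() resemble a regular-step gradient ascent. The sketch below is one possible reading, not the zxhproj implementation: it assumes m_fSearchLength is the initial step, m_fRegularRate the factor by which the step shrinks when the metric fails to increase, and m_fRegularMinStepLength the step length at which the search stops. `RegularStepAscent` and its parameters are hypothetical, and the gradient normalisation here is generic rather than specific to heart registration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical regular-step gradient ascent loosely following Run():
// compute gradient, normalise it, advance, and shrink the step on non-increase.
Vec RegularStepAscent(const std::function<double(const Vec&)>& metric,
                      const std::function<Vec(const Vec&)>& gradient,
                      Vec x, double searchLength, double regularRate,
                      double minStepLength, int maxIterations) {
    assert(regularRate > 0.0 && regularRate < 1.0);
    double step = searchLength;
    double best = metric(x);
    // Stop once repeated non-increases have shrunk the step below the minimum.
    for (int it = 0; it < maxIterations && step >= minStepLength; ++it) {
        const Vec g = gradient(x);                 // compute gradient
        double norm = 0.0;
        for (double gi : g) norm += gi * gi;
        norm = std::sqrt(norm);
        if (norm == 0.0) break;                    // stationary point reached
        Vec trial = x;
        for (std::size_t i = 0; i < x.size(); ++i)
            trial[i] += step * g[i] / norm;        // advance along normalised gradient
        const double value = metric(trial);
        if (value > best) {
            x = trial;                             // metric increased: accept
            best = value;
        } else {
            step *= regularRate;                   // no increase: shrink the step
        }
    }
    return x;
}
```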

`virtual void zxhOptimizerGradient::SetSearchOptimalAlongGradient (int i)` [inline, virtual]

0: advance only one step (default).

1: advance one step, then search forward until the maximum is reached, without setting m_bStop.

2: search for the optimum along the gradient, and set m_bStop if the current position is the optimum.

`float zxhOptimizerGradient::m_fRegriddingPortion` [protected]

`float zxhOptimizerGradient::m_fRegularMinStepLength` [protected]

`float zxhOptimizerGradient::m_fRegularRate` [protected]

`float zxhOptimizerGradient::m_fSearchLength` [protected]

`int zxhOptimizerGradient::m_iSearchOptimalAlongGradient` [protected]

0: advance only one step (default).

1: advance one step, then search forward until the maximum is reached, without setting m_bStop.

2: search for the optimum along the gradient, and set m_bStop if the current position is the optimum.