My work is mainly in the areas of inverse problems, numerical analysis and scientific computing. In particular, I have recently become interested in combining model-based and data-driven approaches to tomographic reconstruction.

I am also teaching Numerical Optimization (COMPGV19).

Below are some example projects I am offering. If you are interested, please contact me to discuss the details. Note that these are examples, but I am happy to supervise other projects related to my research.

Complementary information reconstruction via directional total variation

Pre-requisites: Good background in mathematics and some knowledge of Numerical Optimisation. Acquaintance with Inverse Problems is beneficial but not necessary. Matlab.

In [1] we developed a directional total variation functional which allows for explicit incorporation of edge location and direction into the regularisation functional. In [2] the authors have proven that this functional is indeed a regularisation functional.
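To make the idea concrete, here is a minimal NumPy sketch of a directional TV functional of the form dTV(u) = Σ_x |P_ξ(x) ∇u(x)|, with P_ξ = I − γ ξ ξᵀ and ξ = ∇v / sqrt(|∇v|² + η²), where v is a reference image carrying the edge information. This is the form as we recall it from [1]; the parameters γ and η, the forward-difference gradient and the boundary handling are assumptions of this sketch. Where v has an edge, the component of ∇u parallel to ∇v is almost not penalised; where v is flat, ξ = 0 and dTV reduces to ordinary TV.

```python
import numpy as np

def grad(u):
    """Forward-difference gradient with zero difference at the last row/column."""
    gx = np.zeros_like(u)
    gy = np.zeros_like(u)
    gx[:-1, :] = u[1:, :] - u[:-1, :]
    gy[:, :-1] = u[:, 1:] - u[:, :-1]
    return np.stack([gx, gy], axis=-1)

def tv(u):
    """Isotropic total variation: sum of pointwise gradient magnitudes."""
    return np.sum(np.linalg.norm(grad(u), axis=-1))

def dtv(u, v, gamma=0.99, eta=1e-2):
    """Directional TV of u guided by the reference image v (sketch)."""
    gv = grad(v)
    xi = gv / np.sqrt(np.sum(gv ** 2, axis=-1, keepdims=True) + eta ** 2)
    gu = grad(u)
    # P_xi g = g - gamma * xi * <xi, g>: removes most of the component along xi
    proj = gu - gamma * xi * np.sum(xi * gu, axis=-1, keepdims=True)
    return np.sum(np.linalg.norm(proj, axis=-1))

# Toy example: u shares its edges with the reference v, w has its edges elsewhere
v = np.zeros((64, 64))
v[16:48, 16:48] = 1.0
u = 2.0 * v
w = np.zeros((64, 64))
w[2:10, 2:10] = 1.0
print(tv(u), dtv(u, v))   # dTV is much smaller when the edges are shared
print(tv(w), dtv(w, v))   # identical where v has no edge
```

Setting `gamma=0` recovers ordinary TV, which is a convenient sanity check when experimenting with the parameters.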

In many imaging applications it is common to acquire multiple contrast images of the same object. In particular, we consider dual-energy CT. For simplicity, we will use a 2D problem with parallel-beam geometry, for which the forward operator is the Radon transform.

In this project we consider a joint reconstruction problem using the complementary information, under the assumption of a common set of edges between two X-ray images acquired at different energy levels. Furthermore, we assume that the two measurements have been acquired on disjoint subsets of the measurements, which together constitute a complete set (hence the complementary information).

Informed by microlocal analysis, we can formulate this joint problem with complementary directional total variation regularisation.
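As a rough illustration of the microlocal picture: for the parallel-beam Radon transform, a jump across an edge is stably visible in the data only if the edge normal direction, modulo π, lies in the range of measured projection angles. The sketch assumes, purely for illustration, that the two energies measure the contiguous angular ranges [0, π/2) and [π/2, π), and splits the edge pixels of a disc phantom according to which energy "sees" them. The phantom, the split and the gradient-based estimate of the normal direction are all assumptions.

```python
import numpy as np

n = 128
X, Y = np.meshgrid(np.arange(n) - n / 2, np.arange(n) - n / 2, indexing="ij")
u = (X ** 2 + Y ** 2 < 40 ** 2).astype(float)   # disc phantom: edges in all directions

gx, gy = np.gradient(u)                    # derivatives along axis 0 and axis 1
edges = np.hypot(gx, gy) > 1e-3
theta = np.mod(np.arctan2(gy, gx), np.pi)  # edge normal direction modulo pi

# Hypothetical complementary acquisition: energy 1 measures angles in [0, pi/2),
# energy 2 measures angles in [pi/2, pi).
visible_1 = edges & (theta < np.pi / 2)
visible_2 = edges & (theta >= np.pi / 2)
print(visible_1.sum(), visible_2.sum(), edges.sum())
```

Each energy sees only part of the edge set, but together they see all of it. This is the situation the complementary regularisation is meant to exploit.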

The plan:

- perform numerical simulations

- attempt to produce a proof of regularisation based on the result in [2] - help will be available

- if all works out, help with writing a publication

References:

[1] https://epubs.siam.org/doi/pdf/10.1137/15M1047325

[2] https://iopscience.iop.org/article/10.1088/1361-6420/aab586/meta

Complementary reconstruction via Curvelet decomposition with complementary learning

Pre-requisites: Good background in mathematics and some knowledge of Numerical Optimisation. Acquaintance with Inverse Problems is beneficial but not necessary. Matlab.

We consider the problem of joint reconstruction of multi-contrast (dual) images under an assumption of a common set of underlying singularities (edges). Examples of such multi-contrast problems are dual contrast X-ray tomography or multi wavelength illumination in PAT.

In particular, we want to study reconstruction from "complementary" data of two contrasts, as it is our hypothesis that such data is sufficient for a stable joint reconstruction in this case. The complementary measurements refer to two sets of measurements which are disjoint while their union forms a full set of measurements for a single contrast.

Microlocal analysis tells us that the Curvelet frame allows a decomposition of the image and data into visible and invisible singularities (the theory does not cover the isotropic lowest scale). Thus, for each contrast we can reconstruct the "visible" coefficients.

The objective of this project is to study how the two "visible" partial reconstructions can be put together to obtain a joint (cross-informed) reconstruction for both contrasts.

In principle, the "visible" coefficients of both contrasts together constitute the support of the sparse coefficients for the joint reconstruction. Solving the least squares problem on this support should determine the coefficients for each contrast (the invisible ones, but it also de-biases the visible ones). The isotropic coefficients may all need to be included in this step, as their support can only be determined via thresholding, which may not be robust.
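The least squares step can be sketched as follows for one contrast. A random matrix stands in for the composition of the forward operator with Curvelet synthesis, and the support is a hypothetical index set playing the role of the union of "visible" coefficients of both contrasts; neither comes from the project itself. In the noise-free case, and provided the restricted matrix has full column rank, the coefficients on the support are recovered exactly.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n = 60, 200                            # hypothetical data and coefficient dimensions
A = rng.standard_normal((m, n))           # stand-in for forward operator composed with Curvelet synthesis
support = np.array([3, 17, 42, 99, 150])  # hypothetical joint support ("visible" in either contrast)

c_true = np.zeros(n)
c_true[support] = rng.standard_normal(support.size)
b = A @ c_true                            # noise-free data for one contrast

# Least squares restricted to the joint support; all other coefficients stay zero.
coef, *_ = np.linalg.lstsq(A[:, support], b, rcond=None)
c_hat = np.zeros(n)
c_hat[support] = coef
print(np.max(np.abs(c_hat - c_true)))
```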

The initial assumption of a common set of singularities can be relaxed to a common subset of singularities.

In this relaxed setting, our hypothesis is that the data is not sufficient for a reconstruction, as some singularities will not be measured. The invisible singularities could be filled in via supervised learning on a representative set of labelled dual-contrast data. In this model, the neural network should learn the coherence between the two contrasts, in particular to predict which singularities are common and which are not.

References:

[1] https://iopscience.iop.org/article/10.1088/1361-6420/ab10ca/meta

Architecture inspired solvers: multi-scale learnt methods

Pre-requisites: Good background in mathematics. Acquaintance with Inverse Problems is beneficial but not necessary. Python or Matlab.

In the last few years, neural networks have been successfully deployed for image reconstruction. The most promising approach is to unroll a small number of steps of an iterative method, e.g. the proximal gradient method, and to replace the proximal steps with neural networks whose parameters are trained on a set of relevant labelled data, see e.g. [1]. The networks used are usually quite small.
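For reference, this is the proximal gradient iteration that gets unrolled, written for the sparsity-regularised least squares problem min_x ½‖Ax − b‖² + λ‖x‖₁. Unrolling means fixing a small number of iterations and replacing `soft_threshold` by a trained network. The `prox` argument is only a hook for such a (hypothetical) learned operator, not an existing API.

```python
import numpy as np

def soft_threshold(x, t):
    """Proximal operator of t * ||x||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def proximal_gradient(A, b, lam, n_iter, prox=soft_threshold):
    """Proximal gradient (ISTA) for 0.5 * ||Ax - b||^2 + lam * ||x||_1."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # 1/L with L = ||A||_2^2
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        x = prox(x - step * A.T @ (A @ x - b), step * lam)
    return x

x = proximal_gradient(np.eye(3), np.array([3.0, -0.5, 1.5]), lam=1.0, n_iter=10)
print(x)  # with A = I this equals soft_threshold(b, lam)
```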

In this unrolled form, the resulting network lacks the multi-level/multi-scale structure which is present both in multi-level solvers (e.g. multigrid) and in the network architectures usually used for imaging applications, such as the U-Net [3].

In [2] the authors attempted to rectify this by using a series of multi-scale solves and networks. We would like to try an alternative idea where the entire "reconstruction network" resembles the U-Net architecture and the forward/adjoint/gradient operators are applied at different scales, following the down/up-sampling operators.
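One simple way to apply the gradient operator at a coarser scale is to differentiate the coarse objective ½‖A P x_c − b‖², whose gradient is Pᵀ Aᵀ (A P x_c − b). This assumes a piecewise-constant prolongation P (up-sampling by a factor of 2) and its adjoint Pᵀ (summing pairs of fine cells). The 1D sketch checks this gradient against finite differences; the choice of P and all sizes are assumptions, not part of the planned architecture.

```python
import numpy as np

def upsample(xc):
    """Prolongation P: piecewise-constant up-sampling by a factor of 2."""
    return np.repeat(xc, 2)

def upsample_adjoint(x):
    """Adjoint P^T: sum each pair of neighbouring fine cells."""
    return x.reshape(-1, 2).sum(axis=1)

def coarse_objective(A, b, xc):
    r = A @ upsample(xc) - b
    return 0.5 * r @ r

def coarse_gradient(A, b, xc):
    """Gradient of 0.5 * ||A P xc - b||^2, i.e. P^T A^T (A P xc - b)."""
    return upsample_adjoint(A.T @ (A @ upsample(xc) - b))

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 16))   # fine-scale forward operator (hypothetical)
b = rng.standard_normal(20)
xc = rng.standard_normal(8)         # coarse-scale image

eps = 1e-6
fd = np.array([(coarse_objective(A, b, xc + eps * e) - coarse_objective(A, b, xc - eps * e)) / (2 * eps)
               for e in np.eye(8)])
print(np.max(np.abs(fd - coarse_gradient(A, b, xc))))
```

In a U-shaped reconstruction network, such coarse gradients would be evaluated on the down-sampling path, with a different (e.g. learned) restriction in place of the fixed P.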

If learning the prior is of interest (rather than learning to reconstruct), the network should be fixed (shared) across all the unrolled iterations. A multi-scale realisation of such an idea would again follow a "U" shape and restrict the training of network parameters in each unrolled iteration to the corresponding layer of the U-Net.

If you prefer the unrolled iteration approach, we have a number of ideas to test out here as well.

References:

[1] https://iopscience.iop.org/article/10.1088/1361-6420/aa9581/pdf

[2] https://arxiv.org/pdf/1908.00936.pdf

[3] https://arxiv.org/pdf/1505.04597.pdf

Infimal convolution for multi-functional regularisation

Pre-requisites: Good background in mathematics and numerical optimisation. Acquaintance with Inverse Problems is beneficial but not necessary. Matlab.

Inverse problems involve getting from some measurements back to the quantity of interest. The simplest inverse problem is the solution of a linear system A x = b. However, in typical inverse problems the forward operator (here the matrix A) is ill-conditioned (the problem is ill-posed), which amounts to some loss of information. To solve an inverse problem stably, this information needs to be fed in, usually in the form of a prior (regulariser). While there are powerful, much-loved and widely used regularisation functionals such as total variation, smoothness or sparsity, a typical medical image does not strictly adhere to any such prior, and in many cases a mix of such functionals would lead to a more realistic result. Infimal convolution is a way of composing regularisation functionals. In this project we want to investigate efficient and flexible methods for the infimal convolution of different regularisers and to provide an efficient implementation.
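The definition (R₁ □ R₂)(x) = inf_u R₁(u) + R₂(x − u) can be checked numerically on a scalar example. The infimal convolution of |·| (sparsity-like) and ½|·|² (smoothness-like) is the Huber function, which is quadratic near zero and linear further out, so it blends the two behaviours. The brute-force grid minimisation only illustrates the definition; it is not an efficient method of the kind this project is after.

```python
import numpy as np

def infimal_convolution(f, g, x, grid):
    """Brute-force (f inf-conv g)(x) = min over u in grid of f(u) + g(x - u)."""
    return np.min(f(grid) + g(x - grid))

def huber(x, delta=1.0):
    """Huber function: quadratic for |x| <= delta, linear beyond."""
    a = np.abs(x)
    return np.where(a <= delta, 0.5 * x ** 2, delta * (a - 0.5 * delta))

grid = np.linspace(-5.0, 5.0, 20001)     # candidate splittings x = u + (x - u)
xs = np.linspace(-3.0, 3.0, 13)
vals = np.array([infimal_convolution(np.abs, lambda v: 0.5 * v ** 2, x, grid) for x in xs])
print(np.max(np.abs(vals - huber(xs))))  # small, limited by the grid spacing
```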

References:

[1] https://pdfs.semanticscholar.org/111d/88259a679f519909d6b23f9414ea6ca4edbc.pdf

[2] https://imaging-in-paris.github.io/semester2019/slides/w1/bredies.pdf

[3] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4944669/pdf/10851_2015_Article_624.pdf

Robust learning via Orthogonal Nets

Pre-requisites: Good background in mathematics and numerical optimisation. Acquaintance with Inverse Problems is beneficial but not necessary. Python or Matlab.

For all the success of deep learning, it still lacks provable robustness (cf. adversarial attacks such as DeepFool), which is indispensable in many applications, e.g. security or health. One option to overcome this problem is to explicitly bound the Lipschitz constant of the network. The most stringent bound would correspond to choosing the weight matrices to be orthogonal. The challenge, of course, is that the optimisation is then restricted to the Stiefel manifold of orthogonal matrices.
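Here is why orthogonality helps. For a feed-forward network with 1-Lipschitz activations such as ReLU, the product of the spectral norms of the weight matrices is an upper bound on the network's Lipschitz constant. The sketch compares this bound with empirical difference quotients for a small random, bias-free ReLU network (all sizes are arbitrary). With orthogonal weight matrices (orthonormal rows or columns), every factor in the product, and hence the bound, equals 1.

```python
import numpy as np

rng = np.random.default_rng(1)
weights = [rng.standard_normal((16, 8)),
           rng.standard_normal((16, 16)),
           rng.standard_normal((4, 16))]

def relu_net(x):
    """Bias-free ReLU network x -> W3 relu(W2 relu(W1 x))."""
    for W in weights[:-1]:
        x = np.maximum(W @ x, 0.0)
    return weights[-1] @ x

# Upper bound on the Lipschitz constant: product of spectral norms.
bound = np.prod([np.linalg.norm(W, 2) for W in weights])

ratios = []
for _ in range(1000):
    x, y = rng.standard_normal(8), rng.standard_normal(8)
    ratios.append(np.linalg.norm(relu_net(x) - relu_net(y)) / np.linalg.norm(x - y))
print(max(ratios), bound)  # the empirical ratio never exceeds the bound
```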

The goal of this project is to develop an orthogonal version of popular architectures, e.g. the U-Net, and to implement algorithms for optimisation on the Stiefel manifold. We are interested in both approaches: strictly enforcing the manifold constraint as well as approximate approaches.
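A common building block for optimisation on the Stiefel manifold {W : WᵀW = I} is a Riemannian gradient step: project the Euclidean gradient onto the tangent space at W, then map the updated point back onto the manifold with a retraction. The sketch uses the QR-based retraction, one standard choice among several (the Cayley transform and the polar decomposition are alternatives). The random gradient merely stands in for the gradient of a training loss.

```python
import numpy as np

def tangent_projection(W, G):
    """Project a Euclidean gradient G onto the tangent space of the Stiefel manifold at W."""
    WtG = W.T @ G
    return G - W @ (0.5 * (WtG + WtG.T))

def qr_retraction(W, xi):
    """Map W + xi back onto the manifold via a QR decomposition (signs fixed so diag(R) > 0)."""
    Q, R = np.linalg.qr(W + xi)
    signs = np.sign(np.diag(R))
    signs[signs == 0] = 1.0
    return Q * signs

rng = np.random.default_rng(0)
n, p = 8, 5
W, _ = np.linalg.qr(rng.standard_normal((n, p)))   # starting point on the manifold
G = rng.standard_normal((n, p))                     # stand-in for a loss gradient
W_new = qr_retraction(W, -0.1 * tangent_projection(W, G))
print(np.allclose(W_new.T @ W_new, np.eye(p)), np.linalg.norm(W_new, 2))
```

After the step the columns stay orthonormal, so the spectral norm of the layer stays equal to 1. Approximate approaches would instead add a penalty such as ‖WᵀW − I‖² to the loss and take ordinary gradient steps.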

References:

[1] https://arxiv.org/abs/1905.05929

Robust learning via Hamiltonian ODEs

Pre-requisites: Good background in mathematics and numerical optimisation. Acquaintance with Inverse Problems is beneficial but not necessary. Python or Matlab.

For all the success of deep learning, it still lacks provable robustness (cf. adversarial attacks such as DeepFool), which is indispensable in many applications, e.g. security or health. Deep networks have been interpreted as discretisations of ODEs. In particular, the ODE could be chosen to be a conservative Hamiltonian system, which would result in a stable and robust network.
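One way to obtain a Hamiltonian-type network is to split the features into two variables (y, z) and discretise the ODE with a leapfrog/Verlet-type scheme. The layer form, the tanh activation, the weight scaling and the step size h are illustrative assumptions, not a prescription taken from [1]. A property of such symplectic schemes, demonstrated here, is that each layer can be inverted exactly, so no information is lost as it propagates through the network.

```python
import numpy as np

def forward(y, z, Ks, bs, h):
    """Leapfrog-type Hamiltonian layers: y and z are updated alternately."""
    for K, b in zip(Ks, bs):
        y = y + h * K.T @ np.tanh(K @ z + b)
        z = z - h * K.T @ np.tanh(K @ y + b)
    return y, z

def inverse(y, z, Ks, bs, h):
    """Run the layers backwards; undoes `forward` up to round-off."""
    for K, b in zip(reversed(Ks), reversed(bs)):
        z = z + h * K.T @ np.tanh(K @ y + b)
        y = y - h * K.T @ np.tanh(K @ z + b)
    return y, z

rng = np.random.default_rng(0)
n_layers, m, n = 10, 6, 4
Ks = [0.5 * rng.standard_normal((m, n)) for _ in range(n_layers)]
bs = [rng.standard_normal(m) for _ in range(n_layers)]
y0, z0 = rng.standard_normal(n), rng.standard_normal(n)

y1, z1 = forward(y0, z0, Ks, bs, h=0.1)
y_rec, z_rec = inverse(y1, z1, Ks, bs, h=0.1)
print(np.max(np.abs(y_rec - y0)), np.max(np.abs(z_rec - z0)))
```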

The goal of this project is to research the available approaches, implement and optimise a Hamiltonian network.

References:

[1] https://arxiv.org/abs/1804.04272

Graph based manifold learning for dynamic inverse problems

Pre-requisites: Good background in mathematics and numerical optimisation. Acquaintance with Inverse Problems is beneficial but not necessary. Matlab.

Dynamic inverse problems occur when the quantity of interest is time-dependent. In most dynamic inverse problems, acquiring complete data for each frame is infeasible, either because of the time scale of the underlying dynamics relative to the acquisition time or because of other considerations such as the delivered dose of harmful ionising radiation. Canonical examples of dynamic inverse problems are cardiac MRI and X-ray CT affected by respiratory motion.

The goal of this project is to verify the hypothesis that dynamics can be represented as a manifold. To this end, we would like to apply manifold learning methods, such as the UMAP algorithm, to a set of breath-hold cardiac MRI images/data. To construct the underlying graph, we need a meaningful metric between the frames of a dynamic image/data. The Wasserstein distance measures the cost of transporting one distribution into another and seems a good candidate for such a metric.
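A toy version of the graph construction shows why a transport distance is attractive. The Euclidean distance between two non-overlapping bumps does not depend on how far apart they are, whereas the Wasserstein distance grows with the displacement. The sketch assumes 1D intensity profiles as "frames", where W1 reduces to the L1 distance between cumulative distributions (2D frames would need a proper optimal transport solver). It uses a plain k-nearest-neighbour graph rather than UMAP's own construction. The moving bump is hypothetical data.

```python
import numpy as np

def wasserstein1_1d(a, b, dx):
    """W1 between two non-negative profiles on the same uniform grid, normalised to unit mass."""
    a = a / a.sum()
    b = b / b.sum()
    return np.sum(np.abs(np.cumsum(a) - np.cumsum(b))) * dx

def knn_graph(D, k):
    """Indices of the k nearest neighbours of each node (excluding the node itself)."""
    return np.argsort(D, axis=1)[:, 1:k + 1]

x = np.linspace(0.0, 1.0, 400)
dx = x[1] - x[0]
centres = np.linspace(0.2, 0.8, 10)                    # toy "motion": a bump moving over time
frames = [np.exp(-0.5 * ((x - c) / 0.03) ** 2) for c in centres]

n = len(frames)
D = np.array([[wasserstein1_1d(frames[i], frames[j], dx) for j in range(n)] for i in range(n)])
print(knn_graph(D, 2))  # the nearest neighbours are the frames closest in time
```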

References:

[1] https://arxiv.org/pdf/1802.03426.pdf

[2] https://arxiv.org/pdf/1710.10898.pdf

DeepLabCut adaptation for dynamic CT of foot and ankle

Pre-requisites: Good background in mathematics. Acquaintance with Inverse Problems is beneficial but not necessary. Matlab, Python.

In dynamic X-ray CT of the foot and ankle, we seek to reconstruct the motion of the 28 bones in this structure while the subject executes a pre-specified motion sequence. Due to the use of ionising radiation, only a minimal number of projections per frame can be taken (in the current protocol we use only 3 projections per frame). While this seems very few, each of the 28 bones effectively undergoes a rigid-body motion, meaning that the dimensionality of the underlying motion manifold is at most 6 × 28 = 168 per frame, which is orders of magnitude lower than the dimension of the 4D CT image. Some preliminary results of variational reconstructions are given in [1].
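To make the dimension count concrete, each bone's pose can be parametrised by a rotation vector (3 parameters, turned into a rotation matrix with Rodrigues' formula) and a translation (3 parameters). The sketch applies such a rigid transform to a set of points and compares 28 × 6 pose parameters with the voxel count of a hypothetical 256³ volume per frame. The volume size is an assumption for illustration only.

```python
import numpy as np

def rotation_from_rotvec(w):
    """Rodrigues' formula: rotation by angle |w| about the axis w / |w|."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * K @ K

def rigid_transform(params, points):
    """params = (rotation vector, translation) as a 6-vector; points has shape (N, 3)."""
    R = rotation_from_rotvec(params[:3])
    return points @ R.T + params[3:]

n_bones = 28
params_per_frame = 6 * n_bones   # pose parameters per time frame
voxels_per_frame = 256 ** 3      # hypothetical volume size, for comparison only
print(params_per_frame, voxels_per_frame // params_per_frame)

# Example: rotate by 90 degrees about the z-axis, then shift by 1 along x.
pts = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
print(rigid_transform(np.array([0.0, 0.0, np.pi / 2, 1.0, 0.0, 0.0]), pts))
```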

DeepLabCut [2] is a "pre-trained" deep neural network which is very successful for markerless 3D pose estimation from multi-camera natural images and can be adapted to a new problem with minimal retraining. The idea is to use DeepLabCut (or a modification thereof) along with the 3 X-ray projections to estimate the 3D pose of the individual bones over time.
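Pose estimation from a few views ultimately relies on multi-view geometry. Assume, for illustration, that each X-ray projection can be modelled as a pinhole (cone-beam) camera with a known 3 × 4 projection matrix. A landmark detected in the 3 projections (e.g. by a DeepLabCut-style detector) can then be lifted to 3D by linear (DLT) triangulation. The camera matrices, geometry and landmark below are hypothetical.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X with a 3x4 camera matrix P to 2D detector coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(Ps, uvs):
    """Linear (DLT) triangulation of one point from several views."""
    rows = []
    for P, (u, v) in zip(Ps, uvs):
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    _, _, Vt = np.linalg.svd(np.array(rows))
    Xh = Vt[-1]  # null vector of the stacked linear system
    return Xh[:3] / Xh[3]

def rot_y(a):
    """Rotation by angle a about the vertical (y) axis."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

# Three hypothetical views rotated about the vertical axis, object 5 units in front of each camera.
K = np.diag([1000.0, 1000.0, 1.0])
Ps = [K @ np.hstack([rot_y(a), np.array([[0.0], [0.0], [5.0]])])
      for a in (0.0, np.pi / 3, 2 * np.pi / 3)]

X_true = np.array([0.1, -0.2, 0.3])  # hypothetical landmark on a bone
uvs = [project(P, X_true) for P in Ps]
print(triangulate(Ps, uvs))
```

For rigid bones, several triangulated landmarks per bone would then determine its 6 pose parameters. With noisy detections, the linear estimate would usually be refined by a nonlinear least squares fit.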

References:

[1] https://www.spiedigitallibrary.org/conference-proceedings-of-spie/11072/1107208/Application-of-Proximal-Alternating-Linearized-Minimization-PALM-and-inertial-PALM/10.1117/12.2534827.short?SSO=1

[2] http://www.mousemotorlab.org/deeplabcut

Data analysis of historical grain specimens: joint with Archeology

Co-supervised by Charlene Murphy (Archeology)

Pre-requisites: Machine learning. Beneficial: data analysis and image processing.

The archeology department has a number of data sets of historical grains (stretching over millennia), both photographic and synchrotron data (high-resolution X-ray images). Such data are acquired to verify certain hypotheses about the evolution of the grains over different historical periods. An example question would be to investigate the evolution of the thickness of the shell of a soya bean. This would involve estimating the thickness of the shell from synchrotron data/images.
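A standard way to estimate the local thickness of a segmented structure such as a shell uses the distance transform. The largest distance from a foreground pixel to the background is the radius of the largest inscribed disc, and twice this value estimates the thickness. The brute-force sketch, written in plain NumPy for clarity rather than speed, applies this to a synthetic ring of nominal thickness 6 pixels; the segmentation, the ring and the pixel size are all assumptions. Because distances are measured between pixel centres, the estimate is rough and tends to come out slightly above the nominal value.

```python
import numpy as np

def distance_to_background(mask):
    """Distance from each foreground pixel centre to the nearest background pixel centre."""
    fg = np.argwhere(mask)
    bg = np.argwhere(~mask)
    diff = fg[:, None, :] - bg[None, :, :]
    d = np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)
    dist = np.zeros(mask.shape)
    dist[mask] = d
    return dist

n = 41
X, Y = np.meshgrid(np.arange(n) - n // 2, np.arange(n) - n // 2, indexing="ij")
r = np.hypot(X, Y)
shell = (r >= 10) & (r <= 16)   # synthetic ring standing in for a segmented shell

pixel_size_um = 1.0             # hypothetical voxel size of the synchrotron scan
thickness = 2.0 * distance_to_background(shell).max() * pixel_size_um
print(thickness)
```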

Past projects:

Iterative networks


Co-supervised by Andreas Hauptmann

Pre-requisites: Matlab and some knowledge of Numerical Optimisation. Acquaintance with Inverse Problems is beneficial but not necessary.

Work summary:

The student will develop and implement a flexible software framework for "deep iterative networks", i.e. networks consisting of a number of layers that combine the application of the forward or adjoint operator with convolutional and ReLU operations.
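A single layer of such a network might look as follows. This is a hypothetical 1D sketch, not the framework itself. The current iterate and the data-consistency gradient Aᵀ(Ax − b) are treated as two input channels, passed through a convolution and a ReLU, and a second convolution produces the update. In practice the kernels would be trained. Here they are set by hand so that the hidden channels compute ReLU(g) and ReLU(−g), and the layer reduces exactly to a gradient step x − τ g, which makes its behaviour easy to check.

```python
import numpy as np

def conv_same(x, k):
    """1D convolution with output of the same length as x."""
    return np.convolve(x, k, mode="same")

def iterative_layer(x, A, b, k1, k2):
    """Hypothetical layer: x <- x - sum_c conv(ReLU(conv(x) + conv(grad)), k2[c])."""
    g = A.T @ (A @ x - b)   # gradient of the data term, via forward and adjoint operator
    out = np.zeros_like(x)
    for c in range(k1.shape[0]):
        h = np.maximum(conv_same(x, k1[c, 0]) + conv_same(g, k1[c, 1]), 0.0)
        out += conv_same(h, k2[c])
    return x - out

rng = np.random.default_rng(0)
A = rng.standard_normal((30, 10))   # hypothetical forward operator
b = rng.standard_normal(30)
tau = 1.0 / np.linalg.norm(A, 2) ** 2

delta, zero = np.array([0.0, 1.0, 0.0]), np.zeros(3)
k1 = np.stack([np.stack([zero, delta]), np.stack([zero, -delta])])  # (hidden, input channels, length)
k2 = np.stack([tau * delta, -tau * delta])                          # (hidden, length)

x = np.zeros(10)
for _ in range(500):                # stacking layers = unrolled gradient descent
    x = iterative_layer(x, A, b, k1, k2)
x_ls, *_ = np.linalg.lstsq(A, b, rcond=None)
print(np.max(np.abs(x - x_ls)))
```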

Manifold learning for dynamic inverse problems


Co-supervised by Andreas Hauptmann

Pre-requisites: Matlab and some knowledge of Numerical Optimisation. Acquaintance with Inverse Problems is beneficial but not necessary.

Work summary:

The student will implement an algorithm that integrates manifold learning, in a kernel framework, as a regulariser into dynamic image reconstruction.

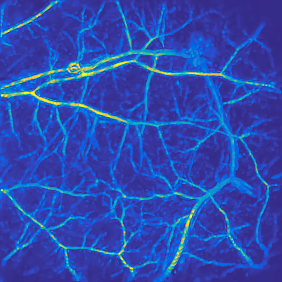

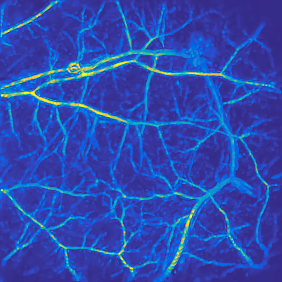

Vessel promoting regularisation via Hessian Schatten norms

Co-supervised by Felix Lucka

Pre-requisites: Matlab and some knowledge of Numerical Optimisation and Inverse Problems

Work summary:

The student will develop and implement a vessel-promoting regularisation method based on a vessel filter which utilises image curvature (Hessian) information. The regularisation method will be tested on a photoacoustic tomography problem with simulated and in-vivo data.

Scientific aims:

Vascular structures present a challenge to popular regularisers such as total variation or sparsity in most known frames. On the other hand, vessel filtering has proven to be a successful post-processing technique for such images. The aim is to develop a convex regulariser which mimics the action of a vessel filter for use in variational reconstruction.

Overview:

The Hessian-based vessel filter [1] is a popular tool for post-processing photoacoustic vasculature images [2]. The basic idea involves pre-smoothing the image to select an appropriate scale and subsequently using curvature information to characterise the structures in the image, e.g. as vessels or plates, based on the singular values of the Hessian.

The aim of this project is to develop this post processing tool into a regularisation functional. To this end a family of Hessian Schatten norms will be investigated to provide models for different structures.
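For reference, the per-pixel Schatten p-norm of the Hessian is the ℓp norm of its singular values: p = 1 gives the nuclear norm, p = 2 the Frobenius norm and p = ∞ the spectral norm. Summing over pixels gives one member of the family of candidate regularisers mentioned above. The finite-difference stencils, unit grid spacing and interior-only evaluation are choices made for this sketch. The quadratic test image has a known constant Hessian, so the values are easy to verify.

```python
import numpy as np

def hessian_2d(f):
    """Finite-difference Hessian entries on interior pixels (unit grid spacing)."""
    fxx = f[2:, 1:-1] - 2.0 * f[1:-1, 1:-1] + f[:-2, 1:-1]
    fyy = f[1:-1, 2:] - 2.0 * f[1:-1, 1:-1] + f[1:-1, :-2]
    fxy = (f[2:, 2:] - f[2:, :-2] - f[:-2, 2:] + f[:-2, :-2]) / 4.0
    return fxx, fxy, fyy

def hessian_schatten(f, p):
    """Sum over pixels of the Schatten p-norm of the 2x2 Hessian (p >= 1, p = np.inf allowed)."""
    fxx, fxy, fyy = hessian_2d(f)
    H = np.stack([np.stack([fxx, fxy], axis=-1),
                  np.stack([fxy, fyy], axis=-1)], axis=-2)  # shape (..., 2, 2)
    s = np.linalg.svd(H, compute_uv=False)                  # singular values per pixel
    if np.isinf(p):
        return np.sum(s.max(axis=-1))
    return np.sum(np.sum(s ** p, axis=-1) ** (1.0 / p))

X, Y = np.meshgrid(np.arange(10.0), np.arange(10.0), indexing="ij")
f = X ** 2 + 3.0 * Y ** 2   # constant Hessian diag(2, 6) on all 8 x 8 interior pixels
print([hessian_schatten(f, p) for p in (1, 2, np.inf)])
```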

The resulting problem can then be solved for instance by a primal dual method [3].

References:

[1] http://link.springer.com/chapter/10.1007%2FBFb0056195

[2] http://spie.org/Publications/Proceedings/Paper/10.1117/12.2005988

[3] http://arxiv.org/pdf/1209.3318.pdf

Transmission and reflection mode for Ultrasound Computed Tomography (USCT) with incident planar waves

Co-supervised by Ben Cox

Pre-requisites: Matlab and some knowledge of Numerical Optimization and Inverse Problems

Work summary:

The student will implement the transmission and reflection problem for Ultrasound Computed Tomography (USCT) using the k-Wave toolbox [1]. They will perform numerical experiments to ascertain the limitations of such a system in different scenarios, including simultaneous recovery of sound speed and density, and a limited number of measurements.

Scientific aims:

The majority of USCT systems use small, point-like ultrasound sources and measure in transmission mode. The goal of this project is to simulate a USCT system which uses plane waves as sources in both transmission and reflection mode, and to investigate the discriminative properties of such a system for sound speed and density recovery.

Overview:

Preclinical imaging of small animals is crucial for the study of disease and in the development of new drugs. Photoacoustic tomography is an emerging imaging modality that shows great promise for preclinical imaging, but the resolution of the images is limited by the heterogeneities in the acoustic properties of the tissue, such as the sound speed. One way to overcome this would be to measure (quantitatively image) these properties and incorporate them into the photoacoustic reconstruction algorithm. Ultrasound computed tomography (USCT) is one way in which the spatially varying acoustic properties of a medium may be imaged quantitatively. At UCL there is an existing pre-clinical photoacoustic imaging system based on a planar scanner that could potentially be adapted to make USCT measurements. It is common in USCT to use small, point-like sources of ultrasound, but with this scanner it may be easier to use plane ultrasonic waves as sources. This project will explore how USCT images can be recovered from measurements of the scattered or transmitted waves when the incident waves are planar.
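As a first sanity check before full-wave simulation, the transmission arrival time of a plane wave can be approximated with straight rays, ignoring refraction and diffraction: each detector element on the far side records the integral of the slowness 1/c along its ray. The sketch assumes a plane wave travelling along the first grid axis. The grid, sound speeds and inclusion are hypothetical values, and this crude model is no substitute for the k-Wave simulations.

```python
import numpy as np

def straight_ray_arrival_times(c, dx):
    """Arrival times of a plane wave travelling along axis 0 (straight rays, no refraction).

    Each column is one ray; its travel time is the sum of dx / c along the column.
    """
    return np.sum(dx / c, axis=0)

nx, ny, dx = 100, 80, 1e-4   # hypothetical 10 mm x 8 mm grid with 0.1 mm spacing
c = np.full((nx, ny), 1500.0)  # water-like background sound speed in m/s
c[40:60, 30:50] = 1600.0       # hypothetical faster inclusion
t = straight_ray_arrival_times(c, dx)
print(t[0], t[40])             # rays through the inclusion arrive earlier
```

Transmission travel times like these mainly inform the sound speed. Separating sound speed from density is where the reflected waves and a full-wave model become important.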

Directional total variation image reconstruction models for limited-view X-ray and photoacoustic tomography

Co-supervised by Felix Lucka

Pre-requisites: Matlab and some knowledge of Numerical Optimisation and Inverse Problems

Work summary:

The student will develop, implement and evaluate total-variation based imaging models that take into account the non-uniform sensitivity of the imaging modality to feature edges in limited-view tomography applications; we will consider X-ray computed tomography (CT) and photoacoustic tomography (PAT). First, a variational image reconstruction algorithm using the conventional, isotropic TV energy will be implemented with the primal-dual hybrid gradient algorithm. Then, the TV energy will be locally modified to account for the different sensitivity of the measurement device towards edges at each location, and the potential benefits of this modification will be evaluated in simulated data scenarios. Finally, results for experimental data from sub-sampled, limited-view PAT will be computed.

Scientific aims:

A qualitative and quantitative improvement of the reconstructed images in limited-view CT and PAT.

Overview:

Limited-view tomography applications like CT or PAT typically suffer from a locally varying reduced sensitivity towards certain image features, most notably edges, while simultaneously introducing unwanted artefacts into the reconstruction. While it is possible to characterize this sensitivity precisely and even to understand the nature and appearance of the artefacts through micro-local analysis (e.g., [1]), few image reconstruction approaches make use of this information. Total variation (TV) based regularization [2] is a popular image reconstruction technique to reliably detect and recover edges in challenging tomographic applications, but in its general form it is blind to the direction of edges, which leads to sub-optimal results in the limited-view settings described above (see, e.g., [3,4]). We want to investigate how to optimally modify TV-based methods locally to account for the different directional sensitivity of the measurement device (see, e.g., [5] for one approach to locally adapting TV methods). The resulting optimization problems will be solved with the primal-dual hybrid gradient algorithm [6].
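For orientation, the primal-dual hybrid gradient method can be sketched on the simplest relevant model: 1D TV denoising, min_u ½‖u − f‖² + λ‖Du‖₁, with a forward-difference operator D. The step sizes satisfy στ‖D‖² ≤ 1 using the bound ‖D‖² ≤ 4. The toy setting (identity forward operator, 1D signal, fixed iteration count) is an assumption for illustration. The project would involve the CT or PAT forward operator and directional variants of TV. For a clean step of height 1 with 20 samples on each side, the exact solution has plateaus at λ/20 and 1 − λ/20.

```python
import numpy as np

def D(u):
    """Forward differences, shape (n,) -> (n-1,)."""
    return u[1:] - u[:-1]

def DT(y):
    """Adjoint of D, shape (n-1,) -> (n,)."""
    return np.concatenate([[0.0], y]) - np.concatenate([y, [0.0]])

def tv_denoise_pdhg(f, lam, n_iter=5000, tau=0.49, sigma=0.49):
    """PDHG for min_u 0.5*||u - f||^2 + lam*||D u||_1 (requires tau*sigma*||D||^2 <= 1)."""
    u = f.copy()
    u_bar = u.copy()
    y = np.zeros(f.size - 1)
    for _ in range(n_iter):
        y = np.clip(y + sigma * D(u_bar), -lam, lam)       # prox of the conjugate of lam*||.||_1
        u_new = (u - tau * DT(y) + tau * f) / (1.0 + tau)  # prox of 0.5*||. - f||^2
        u_bar = 2.0 * u_new - u                            # extrapolation step
        u = u_new
    return u

f = np.concatenate([np.zeros(20), np.ones(20)])   # clean step signal
u = tv_denoise_pdhg(f, lam=2.0)
print(u[:3], u[-3:])   # plateaus close to 0.1 and 0.9
```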

References:

[1] http://epubs.siam.org/doi/abs/10.1137/140977709

[2] http://www.springer.com/cda/content/document/cda_downloaddocument/9783319017112-c1.pdf

[3] http://arxiv.org/abs/1602.02027

[4] http://arxiv.org/abs/1605.00133

[5] http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=6527975

[6] http://link.springer.com/article/10.1007/s10851-010-0251-1