Video Conference CONTROL standards

H.221 is the most important control standard for equipment designed for ISDN, especially current hardware video CODECs. It defines the frame structure for audiovisual services in one or multiple B or H0 channels, or a single H11 or H12 channel, at rates between 64 and 1920 kbit/s. It allows the synchronization of multiple 64 or 384 kbit/s connections and dynamic control over the subdivision of a transmission channel of 64 to 1920 kbit/s into smaller subchannels suitable for voice, video, data and control signals. It is mainly designed for use within synchronized multiway multimedia connections, such as video conferencing.
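As a rough illustration of the rate arithmetic above, the following sketch computes the aggregate rate of a set of synchronized channels. The nominal rates for B (64 kbit/s), H0 (384 kbit/s) and H12 (1920 kbit/s) follow from the figures in the text; the H11 rate of 1536 kbit/s and the function name are assumptions added here for illustration, not taken from the notes.

```python
# Nominal ISDN channel rates in kbit/s. The H11 value is an assumption
# supplied for this sketch; the notes only give the 64..1920 range.
CHANNEL_KBPS = {"B": 64, "H0": 384, "H11": 1536, "H12": 1920}


def aggregate_rate_kbps(channel: str, count: int = 1) -> int:
    """Return the total rate of `count` synchronized channels of one type."""
    if channel in ("H11", "H12") and count != 1:
        raise ValueError("H11 and H12 are used as single channels")
    total = CHANNEL_KBPS[channel] * count
    if not 64 <= total <= 1920:
        raise ValueError("total rate falls outside the 64..1920 kbit/s range")
    return total


if __name__ == "__main__":
    print(aggregate_rate_kbps("B", 2))   # two B channels
    print(aggregate_rate_kbps("H0", 5))  # five H0 channels
```

Two B channels give 128 kbit/s, and five H0 channels reach the 1920 kbit/s ceiling.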

H.221 was designed specifically for use over ISDN. Many problems arise when trying to transmit H.221 frames over a PSDN.

Because a growing number of applications use narrowband (3 kHz) and wideband (7 kHz) speech together with video and data at different rates, this standard recommends a scheme that allows a channel to accommodate speech, and optionally video and/or data, at several rates and in a number of different modes. It also explains the signaling procedures for establishing a compatible mode at call set-up, switching between modes during a call, and allowing for call transfer.

At call set-up, each terminal transfers its capabilities to the remote terminal(s). The terminals then proceed to establish a common mode of operation. A terminal's capabilities consist of: audio capabilities, video capabilities, transfer rate capabilities, data capabilities, capabilities of terminals on restricted networks, and encryption and extension-BAS capabilities.
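The capability exchange can be pictured as a set intersection. The sketch below is only an illustration: the `Capabilities` type, the capability labels, and the policy of picking the highest shared transfer rate are assumptions made here, and the real signaling procedure is considerably more involved.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Capabilities:
    """Hypothetical capability set advertised by one terminal."""
    audio: frozenset
    video: frozenset
    transfer_rates_kbps: frozenset


def common_mode(local: Capabilities, remote: Capabilities) -> Optional[dict]:
    """Pick a mode both terminals support, or None if there is none.

    Assumed policy: audio and a transfer rate are mandatory, video is
    optional, and the highest shared transfer rate is chosen.
    """
    audio = local.audio & remote.audio
    rates = local.transfer_rates_kbps & remote.transfer_rates_kbps
    if not audio or not rates:
        return None
    video = local.video & remote.video
    return {
        "audio": sorted(audio),
        "video": sorted(video),
        "transfer_rate_kbps": max(rates),
    }
```

With more than two terminals, the same intersection could be folded over every advertised capability set.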

This standard is mainly concerned with control and indication signals that must be transmitted frame-synchronously or that require a rapid response. Four categories of control and indication signals are defined: the first relates to video, the second to audio, the third to maintenance, and the last to simple multipoint conference control (signals transmitted between terminals and MCUs).

H.320 covers the technical requirements for narrow-band visual telephone services defined in the H.200/AV.120-Series Recommendations, where channel rates do not exceed 1920 kbit/s.

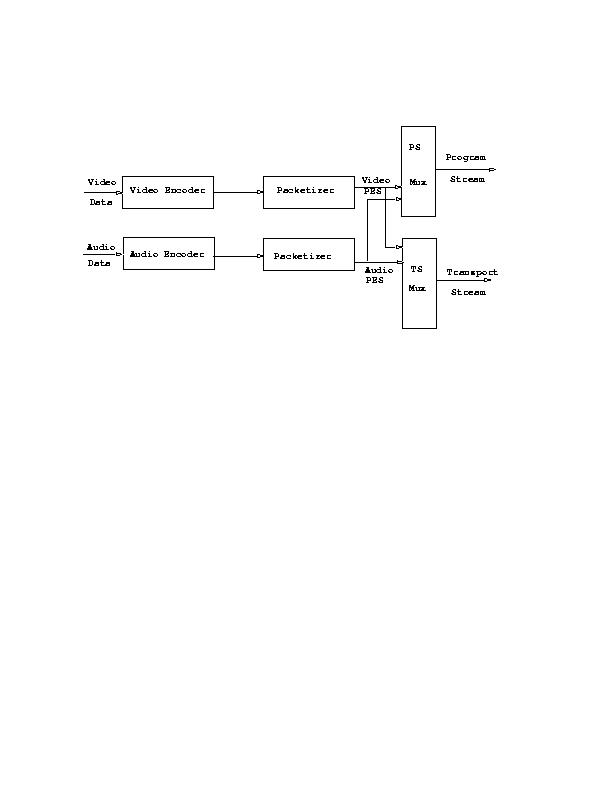

The MPEG Systems part is the control part of the MPEG standard. It addresses the combining of one or more streams of video and audio, as well as other data, into single or multiple streams that are suitable for storage or transmission. The figure below shows a simplified view of the MPEG control system.

Packetised Elementary Stream (PES)

A PES stream consists of a continuous sequence of PES packets of one elementary stream. The PES packets include information regarding the elementary stream clock reference and the elementary stream rate. The PES stream is not, however, defined for interchange and interoperability. Both fixed-length and variable-length PES packets are allowed.

The diagram illustrates the components of the MPEG Systems module:

Picture: mpeg-systems

There are two data stream formats defined: the Transport Stream, which can carry multiple programs simultaneously, and which is optimized for use in applications where data loss may be likely (e.g. transmission on a lossy network), and the Program stream, which is optimized for multimedia applications, for performing systems processing in software, and for MPEG-1 compatibility.

The basic principle of MPEG System coding is the use of time stamps which specify the decoding and display time of audio and video and the time of reception of the multiplexed coded data at the decoder, all in terms of a single 90kHz system clock. This method allows a great deal of flexibility in such areas as decoder design, the number of streams, multiplex packet lengths, video picture rates, audio sample rates, coded data rates, digital storage medium or network performance. It also provides flexibility in selecting which entity is the master time base, while guaranteeing that synchronization and buffer management are maintained. Variable data rate operation is supported. A reference model of a decoder system is specified which provides limits for the ranges of parameters available to encoders and provides requirements for decoders.
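To make the 90 kHz time base concrete, here is a small sketch that converts between clock ticks and seconds and measures the offset between two time stamps. The function names are illustrative only and are not part of any MPEG API.

```python
SYSTEM_CLOCK_HZ = 90_000  # the single system clock used for all time stamps


def ticks_to_seconds(ticks: int) -> float:
    """Convert a time stamp in 90 kHz ticks to seconds."""
    return ticks / SYSTEM_CLOCK_HZ


def seconds_to_ticks(seconds: float) -> int:
    """Convert a duration in seconds to the nearest 90 kHz tick count."""
    return round(seconds * SYSTEM_CLOCK_HZ)


def skew_ms(video_stamp: int, audio_stamp: int) -> float:
    """Offset between a video and an audio time stamp, in milliseconds."""
    return (video_stamp - audio_stamp) * 1000 / SYSTEM_CLOCK_HZ
```

At 25 pictures per second, for example, successive pictures are 3600 ticks apart, so a decoder can compare audio and video time stamps directly without knowing either stream's native rate.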

Putting this on the Desktop, on the Internet

So what happens when we want to put all of this onto our desktop system? There are impacts on the whole architecture, for processor, bus, I/O, storage devices and so on. At the time of writing this course, even with the massive advances in processor and bus speed (e.g. Pentium and PCI), we are still right on the limits of what can be handled for video.

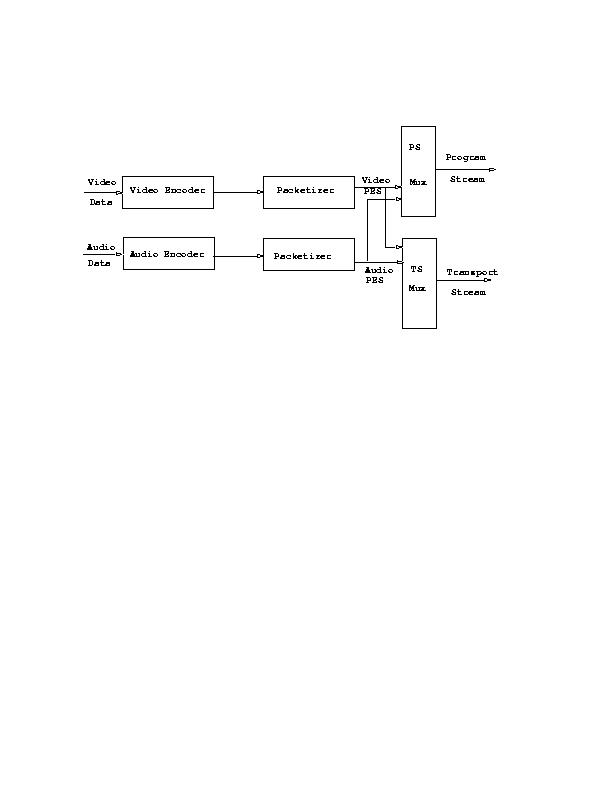

Desktop Systems Model - ISDN style

Picture: conf

However, with judicious optimisation of the implementation of some of the compression schemes described above, it is now possible to encode, compress and transmit a single CIF video stream at 25 frames per second on a workstation with about 50MIPS processing power. The key is to look at the DCT transforms and realize that large chunks of them can be done in table lookup form, at the expense of memory utilization (but then if you are using a lot of memory for video anyhow, this is not that significant).

Encoding/Compression versus Decoding/Decompression

The most expensive part of the transform in the encoder/transmitter side is the frame differencing (differencing of the DCT coded blocks), since this involves a complete pass over the data (frame) every frame time (say 25 times per second over nearly a Megabyte). It turns out that this, and motion prediction if employed, are really I/O intensive rather than strictly being CPU/Instruction intensive and are currently the main bottleneck.

In the meantime, the receiver/decoder/decompression task is a lot easier, possibly as much as 10-25 times less work. This is simply because if there is no change in the video image, no data arrives, and if there is a change, data arrives, so the only work is in the inverse DCT (or other transform) plus copying the data from the network to the framebuffer. Basically, a modest PC can sustain this task for several video streams simultaneously.
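The asymmetry can be seen in a toy version of frame differencing. The sketch below compares pixel blocks of two frames directly, which is a simplification: the notes describe differencing of DCT-coded blocks, and the block size and threshold here are assumed parameters. The encoder has to visit every pixel of every frame, while the receiver only handles the blocks that are reported as changed.

```python
def changed_blocks(prev, curr, block=8, threshold=0):
    """Return the (row, col) origins of blocks that differ between frames.

    `prev` and `curr` are equal-sized 2-D lists of pixel values. A block
    counts as changed when its summed absolute difference exceeds
    `threshold`.
    """
    height, width = len(curr), len(curr[0])
    changed = []
    for top in range(0, height, block):
        for left in range(0, width, block):
            diff = sum(
                abs(curr[y][x] - prev[y][x])
                for y in range(top, min(top + block, height))
                for x in range(left, min(left + block, width))
            )
            if diff > threshold:
                changed.append((top, left))
    return changed
```

Only the blocks in the returned list would need to be transformed and sent; an unchanged frame produces an empty list and therefore no traffic.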

When we want to network our audio and video, again we are up against the limits of what can be done under software control now. There are implications for source, link, switch and sink processing, in terms of throughput, although for compressed video, most modest machines are now pretty capable of what’s required. But in terms of reconstructing the timing of a multimedia stream, there are a few tricky problems. These can be solved as we’ll see later, but there are basically two approaches:

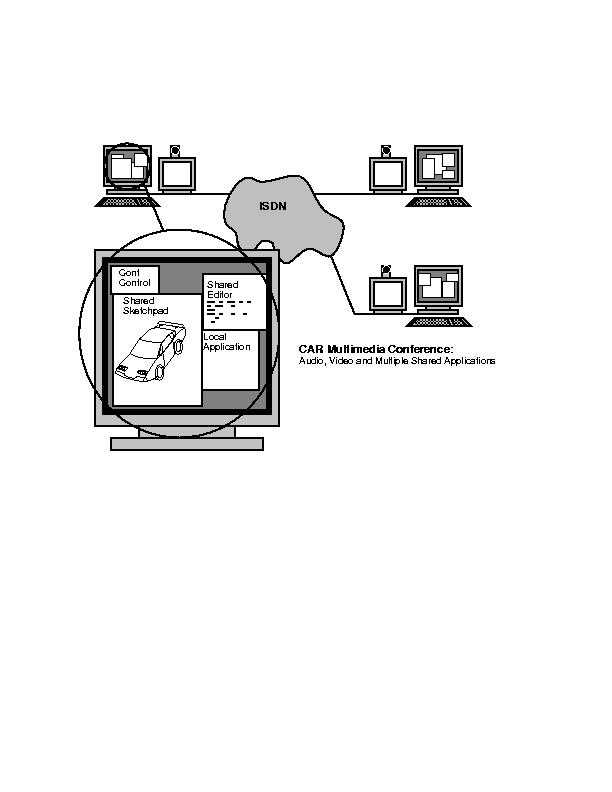

Here we illustrate the two basic approaches - use of a constant bit rate CODEC and circuit based network:

Picture: codec+hp

And use of software and packetizers and a Packet Switched network:

Picture: hardware-arch-psdn

There is no doubt that special purpose hardware is needed for some multimedia tasks. The sheer volume of data that must be dealt with, and the CPU intensive nature of much audio and video processing, mean that some special purpose devices are needed. Some of these are purely in the digital domain, some sit between the analog and the digital, and others are most cost effective in the analog realm.

Digital Signal Processors and Graphics Co-processors

DSPs are specially designed chips that are basically miniature vector processors good at the set of tasks that audio, and video, signal processing involve - typically, these involve a repetitive sequence of instructions carried out over an array of data - e.g. fast Fourier or other transform, or matrix multiplication (to rotate or carry out other POV transforms), or even to render a scene with a given light source.

Coder/Decoder cards in workstations vary enormously in their interface to the a/v world, as well as their interface to the computer.

Some CODECs do on card compression, some don’t. Some replace a framebuffer, while others expect the CPU to copy video data to the framebuffer (or network).

Some include a network interface (e.g. ISDN card in PC video cards). Some include audio with the ISDN network interface (the chipsets are often related or the same).

Most that carry out some extra function like this are good for their allotted task, but poor as general purpose video or audio i/o devices.

Nowadays, most UNIX and Apple workstations have good audio i/o, at least at 64kbps PCM, and sometimes even at 1.4 Mbps CD quality. Most PC cards are still poor (e.g. the soundblaster card is half duplex - not much use for interactive PC based network telephoning).

There are low price framegrabbers available, that often operate as low frame rate video cards.

It is often useful to be able to choose or mix audio (or video) input to a framegrabber or CODEC. However, by far the cheapest and most effective way to do this is by getting an analogue mixer. To mix n digital streams requires n codecs. Sometimes, within a building, one wishes to carry multiple streams (even of analog and digital) between different points. Again, appropriate broadband multiplexors may be cheaper than going to the digital domain and using general purpose networking - the current cost of the bandwidth you need is still quite high. If you want 4 pictures on a screen, an analog video multiplexor is an inexpensive way of achieving this, although this is the sort of transformation that might be feasible digitally very soon for reasonable cost.

Currently, most mikes and cameras are pure analog. Mikes are inexpensive, and audio codecs are becoming commonplace in any case. But cameras could easily be constructed that are pure digital, by simply extracting the signal from the scan across the CCD area in a video camera. There are a couple of such devices coming on to the market this year.

Interactive audio is nigh on impossible if a user can hear their own voice more than a few 10s of milliseconds after they speak. Thus if you are speaking to someone over a long haul net, and your voice traverses it, turns around at the far end, and comes back, then you may have this problem.

In fact, echo cancellers can be obtained that sit between the audio output and input, and sense the delay in the room between the output signal on the speakers and the input on a mike. If they then simply add the same signal, with its phase reversed and with that delay, to the input, the echo is (largely) canceled.

Unfortunately, it isn’t quite that simple!! The signal arriving at the speaker is transformed by the room, and may not be easily recognized as the same as that picked up by the mike. However, this might not matter if a calibration signal can be used to set up the delay line.
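The idealized scheme can be sketched as follows, assuming the delay (in samples) and the echo gain are already known, for instance from a calibration signal. This deliberately ignores the room's transformation of the signal, so it shows only the simple case; practical cancellers use adaptive filters. The function and parameter names are illustrative.

```python
def cancel_echo(mic, speaker, delay, gain=1.0):
    """Subtract a delayed, scaled copy of the speaker signal from the mike.

    `mic` and `speaker` are equal-length sample lists, `delay` is the room
    delay in samples, and `gain` is the assumed echo attenuation. Adding a
    phase-reversed copy is the same as subtracting the copy.
    """
    cleaned = []
    for n, sample in enumerate(mic):
        echo = gain * speaker[n - delay] if n >= delay else 0.0
        cleaned.append(sample - echo)
    return cleaned
```

If the estimated delay or gain is wrong, the subtraction adds a second echo instead of removing the first, which is why the calibration step matters.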

Failing this, many systems fall back on a conference control technology, using either a master floor control person who determines who may speak when (see below) or a simple manual "click to talk" interface which disables the speakers in the user's room.

Multimedia conferencing

Conferencing models—centralised, distributed, etc.

There are two fundamentally different approaches to video teleconferencing and multimedia conferencing that spring from two fundamentally different philosophies:

Conferencing models - centralised and distributed

This is based around the starting point of person to person video telephony, across the POTS (Plain Old Telephone System) or its digital successor, ISDN (Integrated Services Digital Network). The Public Network Operators (PNOs, or telcos or PTTs) already have a network, and it is based on a circuit model: you place a call using a signaling protocol with several stages (call request, call indication, call proceeding, call complete and so on). Once the call has been made, the resources are in place for the duration of the call. You are guaranteed (through expensive engineering, and you pay!) that your bits will get to the destination with:

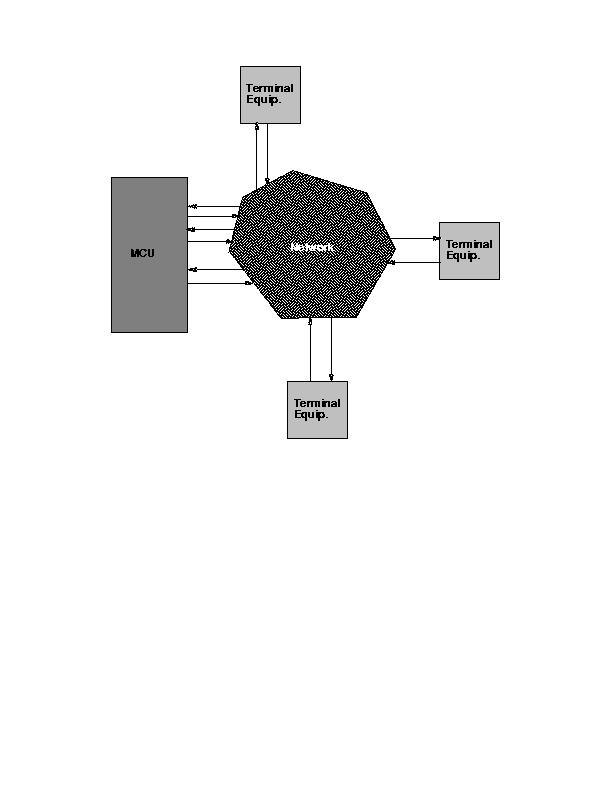

Multisite Circuit Based Conferencing - MCUs

There are two ways you could set up a multisite conference:

With this latter approach, each site has a single CODEC, and makes a call to the MCU site. The MCU has a limit on the number of inbound calls that it can take, and in any case, needs at least n circuits, one per site. Typically, MCUs operate 4-6 CODECs/calls. To build a conference with more than this many sites, you have multiple MCUs, and there is a protocol between the MCUs, so that one can build a hierarchy of them (a tree).

Which site’s video is seen at all the others (remember it can be only one, as CODECs for circuit based video can only decode one signal), is chosen through floor control, which may be based on who is speaking or on a chairman approach (human intervention).

Multisite Circuit Based Conferencing

The diagram illustrates the use of an MCU to link up 3 sites for a circuit based conference:

Picture: hardware-arch.ps

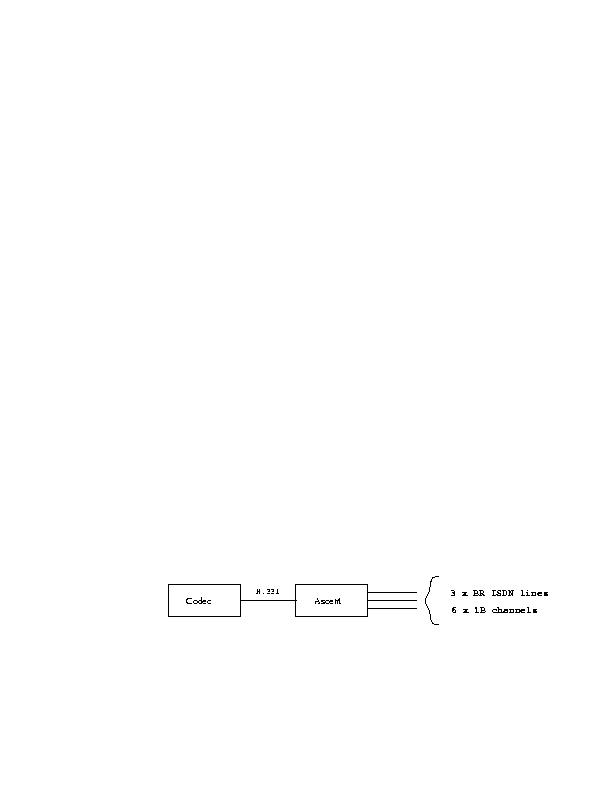

Should greater than basic rate ISDN be needed, it can be combined via a BONDing box as shown:

Picture: ascent

Multicast Packet Based Multisite Conferencing

In a packet switched network, all is very different from the ITU model.

Firstly, on a Local Area Network (LAN), a packet sent can be received by multiple machines (multicast) at no additional cost. Secondly, as we pointed out earlier when looking at the performance of compression algorithms, it is possible for the same power machine to decode many more streams than it encodes. Hence we can send video and audio from each site to all the others whenever we like. A receiver can select which (possibly several or all) of the senders to view.

Thirdly, an audio compression algorithm may well use silence detection and suppression. This can be used for rate adaption (as we will see later), but primarily, it means that, so long as only one person is speaking at any one time usually, the cost in terms of network utilization for audio at least is hardly any more if we send everything all the time. In many uses of such systems where there are a lot of participants it is common that a lot of them are audio senders only (e.g. a class, a seminar) so this can work very well.
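A minimal sketch of silence suppression is shown below, assuming a simple mean-amplitude threshold per frame. The frame length and threshold are hypothetical parameters, and real tools use more careful detectors.

```python
def talkspurt_frames(samples, frame_len, threshold):
    """Return (frame_index, frame) pairs for frames worth transmitting.

    A frame is kept when its mean absolute amplitude exceeds `threshold`;
    frames at or below the threshold are treated as silence and dropped.
    """
    kept = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        level = sum(abs(s) for s in frame) / len(frame)
        if level > threshold:
            kept.append((start // frame_len, frame))
    return kept
```

A silent participant therefore contributes no audio packets at all, which is why many audio senders cost little more than one.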

Multicast Packet based multisite conferencing

Picture: protocol.os

Internet Based Multimedia Conferencing

There is one remaining non-trivial difference between circuit based networks and packet based networks, currently, and that concerns resource reservation:

Provided that a network is not actually overcommitted, this is not necessarily a problem. We can still run packet based video and audio over the Internet quite easily.

The key observations are:

Internet Based Multimedia Conferencing

Picture: jitter-effect

If the overall use at minimum quality exceeds the capacity of the network, then this ‘best effort’ approach will not work. But within this constraint it works just fine. Even as the delay goes up, the sources and sinks adapt (as we’ll see later) and the system proceeds correctly. At a certain point, either the throughput will fall below that which can sustain a tolerable quality audio and/or video, or else the delay will become too high for interactive applications (or both!). At this stage, we would need some scheme for establishing who has priority to use the network, and this would then be based on resource reservation, and potentially, on charging.

Floor control is the business of deciding who is allowed to talk when. We are all familiar with this in the context of meetings or natural face-to-face scenarios. People use all kinds of subtle clues, some less subtle, to decide when they or someone else can talk.

In a video conference, the view of the other participants is often limited (or non-existent), so computer support for helping with floor control is necessary (just think of talking to someone you don't know, maybe over a poor satellite phone call with a ½ second delay, and you get the idea; then add 5 other people on the same line!).

Floor control systems can be nearly automatic, triggered simply by who speaks, or they can use the fact that the participants are in front of computers, and have a user interface to a distributed program (either packet based or MCU based) to request and grant the floor.
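An explicit request-and-grant scheme might look like the sketch below. The first-come, first-served queue is just one possible policy, chosen here for illustration; the class and method names are hypothetical.

```python
from collections import deque


class FloorControl:
    """Toy floor manager: one holder at a time, waiters served in order."""

    def __init__(self):
        self.holder = None
        self.waiting = deque()

    def request(self, participant):
        """Ask for the floor; return True if the participant now holds it."""
        if self.holder is None:
            self.holder = participant
        elif participant != self.holder and participant not in self.waiting:
            self.waiting.append(participant)
        return self.holder == participant

    def release(self, participant):
        """Give up the floor; return the new holder (or None)."""
        if participant == self.holder:
            self.holder = self.waiting.popleft() if self.waiting else None
        return self.holder
```

A speech-triggered scheme could reuse the same structure by calling `request` whenever a participant's audio becomes active.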

Picture: floor_change

Access control in conferencing and multimedia in general is complex. In a circuit based system, it can just rely on trust with the phone company, and perhaps the addition of closed user groups, lists of numbers that are allowed to call in or out of the conferencing group.

In a packet network, there are a number of other questions:

These are all dealt with by applying the principle of end-to-end security. Basically, if we encrypt the audio or video, perhaps signing it with some magic value before encrypting it with keys known only to the sender or receiver (or else using a public key crypto system more suitable to multipoint communication), then we can be assured that our communication is private.

It turns out that encrypting compressed video and audio is really very simple for many compression schemes - in the case of H.261 for example, simply scrambling the Huffman codes used for carrying around the DCT coefficients might do!

Public key cryptography is preferred over private key since one has an easier key distribution problem.

Playout Buffer Adaption for PACKET Nets

It has been asserted that you cannot run audio (or video) over the Internet due to

Delay variation due to other traffic through routers

Loss due to congestion

In fact, both are tolerable up to a point. The delay budget for bearable interaction is often cited as around 200ms. However, for a lecture or broadcast of a seminar, any amount of delay might not matter. The key requirement is to adapt to delay variation, rather than the transit delay.

Given that a sender and receiver are matched at the audio i/o rates, or even if they are slightly askew, a combination of an adaption buffer and silence suppression at the send side can accommodate this.

The receiver estimates the interpacket arrival time variance, using exactly the same technique as TCP uses to estimate the RTT, an exponential weighted moving average calculated from:

$m_i = m_{i-1} + g\,(v_i - m_{i-1})$

Then, depending on whether interactive or lecture mode is in use, the receiver buffers sound for one or more of these variances before playing it out. When it needs to adapt, silence (rather than actual sound) is added or deleted at the beginning of a talkspurt.
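The estimator above can be sketched in a few lines. Here the measurement fed into the moving average is taken to be the absolute deviation of each inter-arrival time from the nominal packet interval, and the gain defaults to 1/8; both choices are assumptions made for illustration, as the notes do not fix them.

```python
def jitter_estimate(arrival_times_ms, packet_interval_ms, gain=0.125):
    """Exponentially weighted moving average of inter-arrival variation.

    Implements m = m + g * (v - m), where v is (by assumption) the absolute
    difference between an observed inter-arrival time and the nominal
    packet interval.
    """
    m = 0.0
    for prev, curr in zip(arrival_times_ms, arrival_times_ms[1:]):
        v = abs((curr - prev) - packet_interval_ms)
        m = m + gain * (v - m)
    return m


def playout_delay_ms(jitter_ms, multiples=1):
    """Buffer for one or more multiples of the estimated variation."""
    return multiples * jitter_ms
```

An interactive session would use a small multiple to keep delay low, while a lecture could afford a larger one, with the change applied only between talkspurts.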

A similar inter-arrival pattern can be used by a video receiver to adapt to a sender that is too fast, or by a decoder of compressed audio or video where the CPU times vary depending on the audio contents!

Playout Buffer Adaption for Packet Nets

Picture:trannsmission.ps

MMCC - the Central Internet Model

It has been argued that the problem with the Internet model of multimedia conferencing is that it doesn’t support simple phone calls, or secure closed ("tightly managed") conferences.

However, it is easy to add this functionality after one has built a scalable system such as the Mbone provides, rather than limiting the system in the first place. For example, the management of keys can provide a closed group very simply. If one is concerned about traffic analysis, then the use of secure management of IP group address usage would achieve the effect of limiting where multicast traffic propagated. Finally, a telephone style signaling protocol can be provided easily to "launch" the applications using the appropriate keys and addresses, simply by giving the users a nice GUI to a distributed calling protocol system.

MMCC and CMMC - central Internet model

Picture: cmmc.ps

Picture: cmmc_sw_mix.ps

CCCP - the Distributed Internet Model

1. The conference architecture should be flexible enough so that any mode of operation of the conference can be used and any application can be brought into use. The architecture should impose the minimum constraints on how an application is designed and implemented.

2. The architecture should be scalable, so that "reasonable" performance is achieved across conferences involving people in the same room, through to conferences spanning continents with different degrees of connectivity, and large numbers of participants. To support this aim, it is necessary explicitly to recognize the failure modes that can occur, and examine how they will affect the conference, and to design the architecture to minimise their impact.

CCCP - Distributed Internet Model

We model a conference as composed of an unknown number of people at geographically separated sites, using a variety of applications. These applications can be at a single site, and have no communication with other applications or instantiations of the same application across multiple sites. If an application shares information across remote sites, we distinguish between the case where the participating processes are tightly coupled (the application cannot run unless all processes are available and connectable) and the case where they are loosely coupled, in that the processes can run even when some of the sites become unavailable. A tightly coupled application is considered to be a single instantiation spread over a number of sites, whilst loosely coupled and independent applications have a number of unique instantiations, although possibly using the same application specific information (such as which multicast address to use...).

The tasks of conference control break down in the following way:

The diagram illustrates the CCCP Model

Picture: cccp_concept.small

We then take these tasks as the basis for defining a set of simple protocols that work over a communication channel. We define a simple class hierarchy, with an application type as the parent class and subclasses of network manager, member and floor manager, and define generic protocols that are used to talk between these classes and the application class, and an inter-application announcement protocol. We derive the necessary characteristics of the protocol messages as reliable/unreliable and confirmed/unconfirmed (where "confirmed" or "unconfirmed" indicates whether responses saying "I heard you" come back, rather than indicating reliability).

It is easily seen that both closed and open models of conferencing can be encompassed, if the communication channel is secure.

To implement the above, we have abstracted a messaging channel, using a distributed inter-process communication system, providing confirmed/unconfirmed and reliable/unreliable semantics. The naming of sources and destinations is based upon application level naming, allowing wildcarding of fields such as instantiations (thus allowing messages to be sent to all instantiations of a particular type of application). The final section of the paper briefly describes the design of the high level components of the messaging channel (named variously the CCC or the triple-C). Mapping of the application level names to network level entities is performed using a distributed naming service, based upon multicast once again, and drawing upon the extensive experience already gained in the distributed operating systems field in designing highly available name services.

REQUIREMENTS on CCCP from tools

Multimedia Integrated Conferencing has a slightly unusual set of requirements. For the most part we are concerned with workstation based multimedia conferencing applications. These applications include vat (LBL’s Visual Audio Tool), IVS (INRIA Videoconferencing System), NV (Xerox’s Network Video tool) and WB (LBL’s shared whiteboard) amongst others. These applications have a number of things in common:

These applications are designed so that conferencing will scale effectively to large numbers of conferees. At the time of writing, they have been used to provide audio, video and shared whiteboard to conferences with about 500 participants. Without multicast, this is clearly not possible. It is also clear that these applications cannot achieve complete consistency between all participants, and so they do not attempt to do so. The conference control they support usually consists of:

Common Control for Conferencing

Thus any form of conference control that is to work with these applications should at least provide these basic facilities, and should also have scaling properties that are no worse than those of the media applications themselves.

It is also clear that the domains these applications are applied to vary immensely. The same tools are used for small (say 20 participants), highly interactive conferences as for large (500 participants) disseminations of seminars, and the application developers are working towards being able to use these applications for "broadcasts" that scale towards millions of receivers.

It should be clear that any proposed conference control scheme should not restrict the applicability of the applications it controls, and therefore should not impose any single conference control policy. For example we would like to be able to use the same audio encoding engine (such as vat), irrespective of the size of the conference or the conference control scheme imposed. This leads us to the conclusion that the media applications (audio, video, whiteboard, etc.) should not provide any conference control facilities themselves, but should provide the handles for external conference control and whatever policy is suitable for the conference in question.

We often have the slightly special needs of being able to support:

These requirements have dictated that we build a number of Conference Management and Multiplexing Centres to provide the necessary format conversion and multiplexing to interwork between the multicast workstation based domain and the unicast (whether IP or ISDN) hardware based domain.

What we need for packet based conferencing:

Where current Conference Control systems fail

The sort of conference control system we are addressing here cannot be:

So what is wrong with current videoconferencing systems?

Specific requirements - Modularity

Conference Control mechanisms and Conference Control applications should be separated. The mechanism to control applications (mute, unmute, change video quality, start sending, stop sending, etc.) should not be tied to any one conference control application, so that different conference control policies can be chosen depending on the conference domain. This suggests a modular approach, with, for example, specific floor control modules being added when required (or possibly a conference manager tool being chosen from a selection of them according to the conference).

Special Requirements: A single conference ctl user interface

A general requirement of conferencing systems, at least for relatively small conferences, is that the participants need to know who is in the conference and who is active. Vat is a significant improvement over telephone audio conferences, in part because participants can see who is (potentially) listening and who is speaking. Similarly, if the whiteboard program WB is being used effectively, the participants can see who is drawing at any time from the activity window. However, a participant in a conference using, say, vat (audio), IVS (video) and WB (whiteboard) has three separate sets of session information, and three places to look to see who is active.

Clearly any conference interface should provide a single set of session and activity information. A useful feature of these applications is the ability to "mute" (or hide or whatever) the local playout of a remote participant. Again, this should be possible from a single interface. Thus the conference control scheme should provide local inter-application communication, allowing the display of session information, and the selective muting of participants.

Taking this to its logical conclusion, the applications should only provide media specific features (such as volume or brightness controls), and all the rest of the conference control features should be provided through a conference control application.

Special Requirements: flexible floor control policies

Conferences come in all shapes and sizes. For some, no floor control, with everyone sending audio when they wish and sending video continuously, is fine. For others, this is not satisfactory, whether because of insufficient available bandwidth or for a number of other reasons. It should be possible to provide floor control functionality, but the providers of audio, video and workspace applications should not specify which policy is to be used. Many different floor control policies can be envisaged. A few example scenarios are:

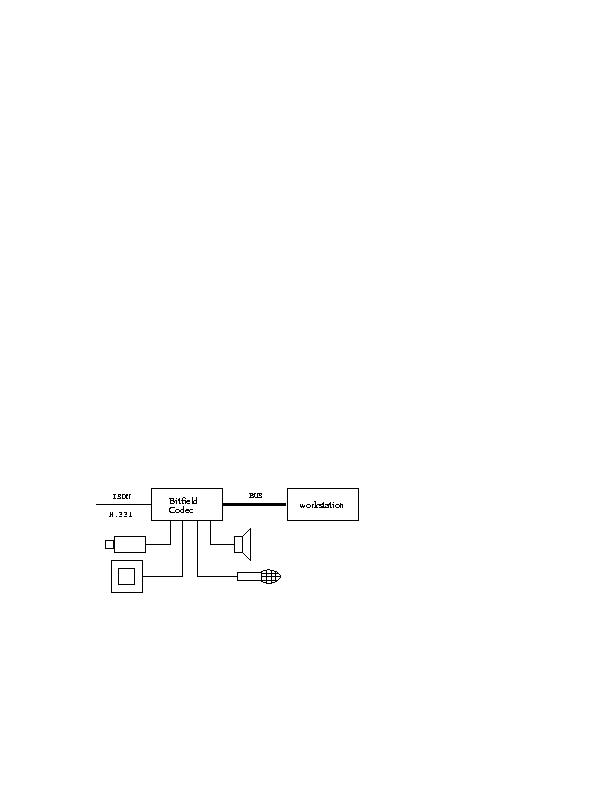

Scaling from tightly to loosely coupled conferences

CCCP originates in part as a result of experience gained from the CAR Multimedia Conference Control system. The CAR system was a tightly coupled centralised system intended for use over ISDN. The functionality it provided can be summarized by listing its basic primitives:

In addition, there were a number of asynchronous notification events:

Packet Conferencing Requirements

The Conference Control Channel (CCC)

To bind the conference constituents together, a common communication channel is required, which offers facilities and services for the applications to send to each other. This is akin to the inter process communication facilities offered by the operating system. The conference communication channel should offer the necessary primitives upon which heterogeneous applications can talk to each other.

The first cut would appear to be a messaging service, which can support 1-to-many communication, and with various levels of confirmation and reliability. We can then build the appropriate application protocols on top of this abstraction to allow the common functionality of conferences.

We need an abstraction to manage a loosely coupled distributed system, which can scale to as many parties as we want. In order to scale, we need the underlying communication to use multicast. Many people have suggested that one way of thinking about multicast is as a multifrequency radio, on which one tunes in to the particular channels of interest. We take this one step further and use it as a handle on which to hang the Inter Process Communications model we offer to the protocols used to manage the conference. Thus we define an application control channel.

Conference Control Channel, continued

CCCP originates in the observation that in a reliable network, conference control would behave like an Ethernet or bus - addressed messages would be put on the bus, and the relevant applications will receive the message, and if necessary respond. In the Internet, this model maps directly onto IP multicast. In fact the IP multicast groups concept is extremely close to what is required. In CCCP, applications have a tuple as their address: (instantiation, application type, address). We shall discuss exactly what goes into these fields in more detail later. In actual fact, an application can have a number of tuples as its address, depending on its multiple functions.

Examples of CCC use of this would be:

DESTINATION TUPLE                        MESSAGE

(1, audio, localhost)                    <start sending>

(*, activity_management, localhost)      <receiving audio from host:> ADDRESS

(*, session_management, *)               <I am:> NAME

(*, session_management, *)               <I have media:> {application list}

(*, session_management, *)               <Participant list:> {participant list}

(*, floor_control, *)                    <REQUEST FLOOR>

(*, floor_control, *)                    <I HAVE FLOOR>

and so on. The actual messages carried depend on the application type, and thus the protocol is easily extended by adding new application types.
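The addressing scheme amounts to matching a destination tuple, possibly containing wildcards, against the tuples each application has registered. The sketch below shows one way this matching could work; the type and function names are hypothetical and are not taken from any CCCP implementation.

```python
from typing import NamedTuple

WILDCARD = "*"


class CCCAddress(NamedTuple):
    """(instantiation, application type, address) tuple from the text."""
    instantiation: str
    application_type: str
    address: str


def matches(destination: CCCAddress, own: CCCAddress) -> bool:
    """True if every destination field is a wildcard or equals ours."""
    return all(d == WILDCARD or d == o for d, o in zip(destination, own))


def recipients(destination: CCCAddress, applications: dict) -> list:
    """Names of applications with at least one address that matches.

    `applications` maps an application name to its list of CCCAddress
    tuples, since one application may register several.
    """
    return [
        name
        for name, addresses in applications.items()
        if any(matches(destination, a) for a in addresses)
    ]
```

A new application type needs no change to the matching logic; it only registers a tuple with a new value in the application type field.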

CCCP would be of very little use if it were merely the simple protocol described above, because of the inherently unreliable nature of the Internet. Techniques for increasing the end-to-end reliability are well known and varied, and so will not be discussed here. However, it should be stressed that most (but not all) of the CCCP messages will be addressed to groups. Thus a number of enhanced reliability modes may be desired:

It makes little sense for applications requiring conference control to re-implement the schemes they require. As there are a limited number of these messages, it makes sense to implement CCCP in a library, so an application can send a CCCP message with a requested reliability, without the application writer having to concern themselves with how CCCP sends the message(s). The underlying mechanism can then be optimized later for conditions that were not initially foreseen, without requiring a re-write of the application software.

There are a number of "reliable" multicast schemes available. It may be desirable to incorporate such a scheme into the CCC library, to aid support of small tightly coupled conferences.